有噪信道编码定理

此词条暂由Henry翻译。

In information theory, the noisy-channel coding theorem (sometimes Shannon's theorem or Shannon's limit), establishes that for any given degree of noise contamination of a communication channel, it is possible to communicate discrete data (digital information) nearly error-free up to a computable maximum rate through the channel. This result was presented by Claude Shannon in 1948 and was based in part on earlier work and ideas of Harry Nyquist and Ralph Hartley.

In information theory, the noisy-channel coding theorem (sometimes Shannon's theorem or Shannon's limit), establishes that for any given degree of noise contamination of a communication channel, it is possible to communicate discrete data (digital information) nearly error-free up to a computable maximum rate through the channel. This result was presented by Claude Shannon in 1948 and was based in part on earlier work and ideas of Harry Nyquist and Ralph Hartley.

在信息论中,Noisy-channel coding theorem有噪声信道编码定理(有时是香农定理或香农极限)确定了对于通信信道的任何给定程度的噪声污染,都有可能通过信道传输几乎无差错的离散数据(数字信息),从而达到可计算的最大速率。这个结果是由克劳德·香农在1948年提出的,部分基于哈利·奈奎斯特和拉尔夫·哈特利早期的工作和思想。

The Shannon limit or Shannon capacity of a communication channel refers to the maximum rate of error-free data that can theoretically be transferred over the channel if the link is subject to random data transmission errors, for a particular noise level. It was first described by Shannon (1948), and shortly after published in a book by Claude Elwood Shannon and Warren Weaver in 1949 entitled The Mathematical Theory of Communication. (

). This founded the modern discipline of information theory.

The Shannon limit or Shannon capacity of a communication channel refers to the maximum rate of error-free data that can theoretically be transferred over the channel if the link is subject to random data transmission errors, for a particular noise level. It was first described by Shannon (1948), and shortly after published in a book by Claude Elwood Shannon and Warren Weaver in 1949 entitled The Mathematical Theory of Communication. (). This founded the modern discipline of information theory.

通信信道的香农极限或香农容量是指在特定噪声水平下,如果链路受到随机数据传输错误的影响,理论上可以通过信道传输的最大无错误数据速率。它最早由香农(1948)描述,不久后在1949年由克劳德·埃尔伍德·香农和沃伦·韦弗出版的一本书中发表,书名为《通信的数学理论》。这奠定了现代信息论学科的基础。

Overview 总览

Stated by Claude Shannon in 1948, the theorem describes the maximum possible efficiency of error-correcting methods versus levels of noise interference and data corruption. Shannon's theorem has wide-ranging applications in both communications and data storage. This theorem is of foundational importance to the modern field of information theory. Shannon only gave an outline of the proof. The first rigorous proof for the discrete case is due to Amiel Feinstein[1] in 1954.

Stated by Claude Shannon in 1948, the theorem describes the maximum possible efficiency of error-correcting methods versus levels of noise interference and data corruption. Shannon's theorem has wide-ranging applications in both communications and data storage. This theorem is of foundational importance to the modern field of information theory. Shannon only gave an outline of the proof. The first rigorous proof for the discrete case is due to Amiel Feinstein in 1954.

香农在1948年提出的定理描述了纠错方法的最大可能效率与噪声干扰和数据损坏程度的关系。香农定理在通信和数据存储中都有广泛的应用。这个定理对现代信息论领域具有重要的基础性意义。香农只概述了证明。1954年,阿米尔·范斯坦提出了离散情况的第一个严格证明。

The Shannon theorem states that given a noisy channel with channel capacity C and information transmitted at a rate R, then if [math]\displaystyle{ R \lt C }[/math] there exist codes that allow the probability of error at the receiver to be made arbitrarily small. This means that, theoretically, it is possible to transmit information nearly without error at any rate below a limiting rate, C.

The Shannon theorem states that given a noisy channel with channel capacity C and information transmitted at a rate R, then if [math]\displaystyle{ R \lt C }[/math] there exist codes that allow the probability of error at the receiver to be made arbitrarily small. This means that, theoretically, it is possible to transmit information nearly without error at any rate below a limiting rate, C.

香农定理指出,给定一个信道容量为C的噪声信道和以R速率传输的信息,那么如果R<C,则存在允许接收机处的错误概率任意小的码。这意味着,从理论上讲,以低于极限速率C的任何速率几乎无误地传输信息是可能的。

The converse is also important. If [math]\displaystyle{ R \gt C }[/math], an arbitrarily small probability of error is not achievable. All codes will have a probability of error greater than a certain positive minimal level, and this level increases as the rate increases. So, information cannot be guaranteed to be transmitted reliably across a channel at rates beyond the channel capacity. The theorem does not address the rare situation in which rate and capacity are equal.

The converse is also important. If [math]\displaystyle{ R \gt C }[/math], an arbitrarily small probability of error is not achievable. All codes will have a probability of error greater than a certain positive minimal level, and this level increases as the rate increases. So, information cannot be guaranteed to be transmitted reliably across a channel at rates beyond the channel capacity. The theorem does not address the rare situation in which rate and capacity are equal.

定理反过来也很重要。如果R>C,任意小的错误概率都是不可能实现的。所有代码的错误概率都将大于某个正最小水平,并且该水平随着速率的增加而增加。因此,不能保证信息以超出信道容量的速率可靠地跨信道传输。这个定理并不适用于速率和容量相等的罕见情况

The channel capacity [math]\displaystyle{ C }[/math] can be calculated from the physical properties of a channel; for a band-limited channel with Gaussian noise, using the Shannon–Hartley theorem.

The channel capacity [math]\displaystyle{ C }[/math] can be calculated from the physical properties of a channel; for a band-limited channel with Gaussian noise, using the Shannon–Hartley theorem.

信道容量C可以从信道的物理特性计算出来,对于带有高斯噪声的带限信道,可以使用 Shannon–Hartley theorem香农-哈特莱定理。

Simple schemes such as "send the message 3 times and use a best 2 out of 3 voting scheme if the copies differ" are inefficient error-correction methods, unable to asymptotically guarantee that a block of data can be communicated free of error. Advanced techniques such as Reed–Solomon codes and, more recently, low-density parity-check (LDPC) codes and turbo codes, come much closer to reaching the theoretical Shannon limit, but at a cost of high computational complexity. Using these highly efficient codes and with the computing power in today's digital signal processors, it is now possible to reach very close to the Shannon limit. In fact, it was shown that LDPC codes can reach within 0.0045 dB of the Shannon limit (for binary Additive white Gaussian noise (AWGN) channels, with very long block lengths).[2]

Simple schemes such as "send the message 3 times and use a best 2 out of 3 voting scheme if the copies differ" are inefficient error-correction methods, unable to asymptotically guarantee that a block of data can be communicated free of error. Advanced techniques such as Reed–Solomon codes and, more recently, low-density parity-check (LDPC) codes and turbo codes, come much closer to reaching the theoretical Shannon limit, but at a cost of high computational complexity. Using these highly efficient codes and with the computing power in today's digital signal processors, it is now possible to reach very close to the Shannon limit. In fact, it was shown that LDPC codes can reach within 0.0045 dB of the Shannon limit (for binary Additive white Gaussian noise (AWGN) channels, with very long block lengths).

诸如“发送消息3次,如果副本不同,则使用3选2最佳投票方案”之类的简单方案是低效的纠错方法,无法渐近地保证数据块可以无错误地通信。诸如Reed-Solomon码以及最近的低密度奇偶校验(LDPC)码和turbo码之类的先进技术更接近于达到理论上的香农极限,但代价是计算复杂度很高。使用这些高效的代码和当今数字信号处理器的计算能力,现在有可能达到非常接近香农极限。事实上,LDPC码可以达到香农极限的0.0045dB以内(对于二进制加性高斯白噪声(AWGN)信道,具有很长的块长度)。 。

Mathematical statement数学表述

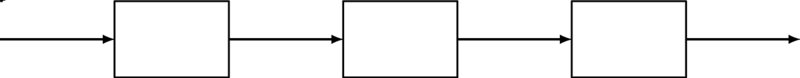

The basic mathematical model for a communication system is the following:

The basic mathematical model for a communication system is the following:

通信系统的基本数学模型如下:

Channel model

通道模型

A message W is transmitted through a noisy channel by using encoding and decoding functions. An encoder maps W into a pre-defined sequence of channel symbols of length n. In its most basic model, the channel distorts each of these symbols independently of the others. The output of the channel –the received sequence– is fed into a decoder which maps the sequence into an estimate of the message. In this setting, the probability of error is defined as:

A message W is transmitted through a noisy channel by using encoding and decoding functions. An encoder maps W into a pre-defined sequence of channel symbols of length n. In its most basic model, the channel distorts each of these symbols independently of the others. The output of the channel –the received sequence– is fed into a decoder which maps the sequence into an estimate of the message. In this setting, the probability of error is defined as:

通过使用编码和解码功能,通过噪声信道传送消息W。编码器将W映射到长度为n的预定义信道符号序列中。在其最基本的模型中,信道独立于其他符号而扭曲这些符号中的每一个。信道的输出——接收到的序列——被送入解码器,解码器将序列映射成消息的估计。在此设置中,错误概率定义为:

- [math]\displaystyle{ P_e = \text{Pr}\left\{ \hat{W} \neq W \right\}. }[/math]

[math]\displaystyle{ P_e = \text{Pr}\left\{ \hat{W} \neq W \right\}. }[/math]

[数学 > p _ e = 文本{ Pr }左{ w } neq w 右}。数学

Theorem (Shannon, 1948):

Theorem (Shannon, 1948):

定理(Shannon,1948) :

- 1. For every discrete memoryless channel, the channel capacity is defined in terms of the mutual information [math]\displaystyle{ I(X; Y) }[/math],

1. For every discrete memoryless channel, the channel capacity is defined in terms of the mutual information [math]\displaystyle{ I(X; Y) }[/math],

1.对于每一个离散的无记忆信道,信道容量是根据互信息I(x; y)来定义的,

- [math]\displaystyle{ \ C = \sup_{p_X} I(X;Y) }[/math][3]

[math]\displaystyle{ \ C = \sup_{p_X} I(X;Y) }[/math]

[ math > c = sup { p _ x } i (x; y) </math ]

- has the following property. For any [math]\displaystyle{ \epsilon\gt 0 }[/math] and [math]\displaystyle{ R\lt C }[/math], for large enough [math]\displaystyle{ N }[/math], there exists a code of length [math]\displaystyle{ N }[/math] and rate [math]\displaystyle{ \geq R }[/math] and a decoding algorithm, such that the maximal probability of block error is [math]\displaystyle{ \leq \epsilon }[/math].

has the following property. For any [math]\displaystyle{ \epsilon\gt 0 }[/math] and [math]\displaystyle{ R\lt C }[/math], for large enough [math]\displaystyle{ N }[/math], there exists a code of length [math]\displaystyle{ N }[/math] and rate [math]\displaystyle{ \geq R }[/math] and a decoding algorithm, such that the maximal probability of block error is [math]\displaystyle{ \leq \epsilon }[/math].

具有以下属性。对于任何ε>0 和 R<C ,对于足够大的N ,存在一个长度为 N 和速率R的代码和一个解码算法,使得块错误的最大概率为ε 。

- 2. If a probability of bit error [math]\displaystyle{ p_b }[/math] is acceptable, rates up to [math]\displaystyle{ R(p_b) }[/math] are achievable, where

2. If a probability of bit error [math]\displaystyle{ p_b }[/math] is acceptable, rates up to [math]\displaystyle{ R(p_b) }[/math] are achievable, where

2.如果位错概率pb是可以接受的,那么达到R(pb)的速率是可以实现的

- [math]\displaystyle{ R(p_b) = \frac{C}{1-H_2(p_b)} . }[/math]

[math]\displaystyle{ R(p_b) = \frac{C}{1-H_2(p_b)} . }[/math]

1-H _ 2(p _ b)} . </math >

- and [math]\displaystyle{ H_2(p_b) }[/math] is the binary entropy function

and [math]\displaystyle{ H_2(p_b) }[/math] is the binary entropy function

2(p _ b) </math > 是二元熵函数

- [math]\displaystyle{ H_2(p_b)=- \left[ p_b \log_2 {p_b} + (1-p_b) \log_2 ({1-p_b}) \right] }[/math]

[math]\displaystyle{ H_2(p_b)=- \left[ p_b \log_2 {p_b} + (1-p_b) \log_2 ({1-p_b}) \right] }[/math]

< math > h _ 2(p _ b) =-左[ p _ b log_2{ p _ b } + (1-p _ b) log_2({1-p _ b })右] </math >

- 3. For any [math]\displaystyle{ p_b }[/math], rates greater than [math]\displaystyle{ R(p_b) }[/math] are not achievable.

3. For any [math]\displaystyle{ p_b }[/math], rates greater than [math]\displaystyle{ R(p_b) }[/math] are not achievable.

3.对于任何pb ,比率大于R(pb)是无法实现的。

(MacKay (2003), p. 162; cf Gallager (1968), ch.5; Cover and Thomas (1991), p. 198; Shannon (1948) thm. 11)

(MacKay (2003), p. 162; cf Gallager (1968), ch.5; Cover and Thomas (1991), p. 198; Shannon (1948) thm. 11)

(MacKay (2003) ,第162页; cf Gallager (1968) ,第5章; Cover and Thomas (1991) ,第198页; Shannon (1948) thm。11)

Outline of proof证明概述

As with the several other major results in information theory, the proof of the noisy channel coding theorem includes an achievability result and a matching converse result. These two components serve to bound, in this case, the set of possible rates at which one can communicate over a noisy channel, and matching serves to show that these bounds are tight bounds.

As with the several other major results in information theory, the proof of the noisy channel coding theorem includes an achievability result and a matching converse result. These two components serve to bound, in this case, the set of possible rates at which one can communicate over a noisy channel, and matching serves to show that these bounds are tight bounds.

结合信息论中的其他几个主要结果,噪声信道编码定理的证明包括一个可达性结果和一个匹配逆结果。在这种情况下,这两个分量用来限定一个人在噪声信道上进行通信的可能速率集,而匹配用来表明这些界限是紧界限。

The following outlines are only one set of many different styles available for study in information theory texts.

The following outlines are only one set of many different styles available for study in information theory texts.

下面的提纲只是信息论文本中可供学习的许多不同风格中的一组

Achievability for discrete memoryless channels离散无记忆信道的可达性

This particular proof of achievability follows the style of proofs that make use of the asymptotic equipartition property (AEP). Another style can be found in information theory texts using error exponents.

This particular proof of achievability follows the style of proofs that make use of the asymptotic equipartition property (AEP). Another style can be found in information theory texts using error exponents.

这个特殊的可实现性证明遵循了利用渐近均分性质(AEP)的证明风格。另一种风格可以在信息论文本中找到使用错误指数。

Both types of proofs make use of a random coding argument where the codebook used across a channel is randomly constructed - this serves to make the analysis simpler while still proving the existence of a code satisfying a desired low probability of error at any data rate below the channel capacity.

Both types of proofs make use of a random coding argument where the codebook used across a channel is randomly constructed - this serves to make the analysis simpler while still proving the existence of a code satisfying a desired low probability of error at any data rate below the channel capacity.

这两种类型的证明都使用了一个随机编码参数,其中跨信道使用的码本是随机构造的-这使得分析更简单,同时仍然证明在低于信道容量的任何数据速率下,存在满足期望的低错误概率的码。

By an AEP-related argument, given a channel, length [math]\displaystyle{ n }[/math] strings of source symbols [math]\displaystyle{ X_1^{n} }[/math], and length [math]\displaystyle{ n }[/math] strings of channel outputs [math]\displaystyle{ Y_1^{n} }[/math], we can define a jointly typical set by the following:

By an AEP-related argument, given a channel, length [math]\displaystyle{ n }[/math] strings of source symbols [math]\displaystyle{ X_1^{n} }[/math], and length [math]\displaystyle{ n }[/math] strings of channel outputs [math]\displaystyle{ Y_1^{n} }[/math], we can define a jointly typical set by the following:

通过一个与 aep-相关的参数,给定一个通道,长度n的源符号的字符串X1n,以及长度n通道输出的字符串Y1n,我们可以定义一个联合的典型集合如下:

- [math]\displaystyle{ A_\varepsilon^{(n)} = \{(x^n, y^n) \in \mathcal X^n \times \mathcal Y^n }[/math]

[math]\displaystyle{ A_\varepsilon^{(n)} = \{(x^n, y^n) \in \mathcal X^n \times \mathcal Y^n }[/math]

- [math]\displaystyle{ 2^{-n(H(X)+\varepsilon)} \le p(X_1^n) \le 2^{-n(H(X) - \varepsilon)} }[/math]

[math]\displaystyle{ 2^{-n(H(X)+\varepsilon)} \le p(X_1^n) \le 2^{-n(H(X) - \varepsilon)} }[/math]

2 ^ {-n (h (x) + varepsilon)} le p (x _ 1 ^ n) le 2 ^ {-n (h (x)-varepsilon)} </math >

- [math]\displaystyle{ 2^{-n(H(Y) + \varepsilon)} \le p(Y_1^n) \le 2^{-n(H(Y)-\varepsilon)} }[/math]

[math]\displaystyle{ 2^{-n(H(Y) + \varepsilon)} \le p(Y_1^n) \le 2^{-n(H(Y)-\varepsilon)} }[/math]

2 ^ {-n (h (y) + varepsilon)} le p (y _ 1 ^ n) le 2 ^ {-n (h (y)-varepsilon)}

- [math]\displaystyle{ {2^{-n(H(X,Y) + \varepsilon)}}\le p(X_1^n, Y_1^n) \le 2^{-n(H(X,Y) -\varepsilon)} \} }[/math]

[math]\displaystyle{ {2^{-n(H(X,Y) + \varepsilon)}}\le p(X_1^n, Y_1^n) \le 2^{-n(H(X,Y) -\varepsilon)} \} }[/math]

{2 ^ {-n (h (x,y) + varepsilon)} le p (x _ 1 ^ n,y _ 1 ^ n) le 2 ^ {-n (h (x,y)-varepsilon)}

We say that two sequences [math]\displaystyle{ {X_1^n} }[/math] and [math]\displaystyle{ Y_1^n }[/math] are jointly typical if they lie in the jointly typical set defined above.

We say that two sequences [math]\displaystyle{ {X_1^n} }[/math] and [math]\displaystyle{ Y_1^n }[/math] are jointly typical if they lie in the jointly typical set defined above.

我们说两个序列X1n和Y1n如果它们位于上面定义的联合典型集合中,那么它们是共同典型的。

Steps

Steps

步骤

- In the style of the random coding argument, we randomly generate [math]\displaystyle{ 2^{nR} }[/math] codewords of length n from a probability distribution Q.

In the style of the random coding argument, we randomly generate [math]\displaystyle{ 2^{nR} }[/math] codewords of length n from a probability distribution Q.

在随机编码参数的风格中,我们随机从概率分布 q 生成长度为 n 的长度为2nR的码字。

- This code is revealed to the sender and receiver. It is also assumed that one knows the transition matrix [math]\displaystyle{ p(y|x) }[/math] for the channel being used.

This code is revealed to the sender and receiver. It is also assumed that one knows the transition matrix [math]\displaystyle{ p(y|x) }[/math] for the channel being used.

这段代码向发送者和接收者显示。还假设人们知道所使用的通道的转移矩阵。

- A message W is chosen according to the uniform distribution on the set of codewords. That is, [math]\displaystyle{ Pr(W = w) = 2^{-nR}, w = 1, 2, \dots, 2^{nR} }[/math].

A message W is chosen according to the uniform distribution on the set of codewords. That is, [math]\displaystyle{ Pr(W = w) = 2^{-nR}, w = 1, 2, \dots, 2^{nR} }[/math].

根据码字集上的均匀分布选择消息 w。也就是,Pr (w = w) = 2-nR ,w = 1,2,,2nR。

- The message W is sent across the channel.

The message W is sent across the channel.

消息 w 是通过通道发送的。

- The receiver receives a sequence according to [math]\displaystyle{ P(y^n|x^n(w))= \prod_{i = 1}^np(y_i|x_i(w)) }[/math]

The receiver receives a sequence according to [math]\displaystyle{ P(y^n|x^n(w))= \prod_{i = 1}^np(y_i|x_i(w)) }[/math]

接收端根据P(y^n|x^n(w))= \prod_{i = 1}^np(y_i|x_i(w))接收一个序列

- Sending these codewords across the channel, we receive [math]\displaystyle{ Y_1^n }[/math], and decode to some source sequence if there exists exactly 1 codeword that is jointly typical with Y. If there are no jointly typical codewords, or if there are more than one, an error is declared. An error also occurs if a decoded codeword doesn't match the original codeword. This is called typical set decoding.

Sending these codewords across the channel, we receive [math]\displaystyle{ Y_1^n }[/math], and decode to some source sequence if there exists exactly 1 codeword that is jointly typical with Y. If there are no jointly typical codewords, or if there are more than one, an error is declared. An error also occurs if a decoded codeword doesn't match the original codeword. This is called typical set decoding.

通过信道发送这些码字,我们接收到Y1n,并解码到某个源序列,如果存在正好与 y 共同典型的一个码字。如果没有共同的典型代码字,或者有多个代码字,则声明错误。如果解码的码字与原始码字不匹配,也会发生错误。这就是所谓的典型集合译码。

The probability of error of this scheme is divided into two parts:

The probability of error of this scheme is divided into two parts:

该方案的误差概率分为两部分:

- First, error can occur if no jointly typical X sequences are found for a received Y sequence

First, error can occur if no jointly typical X sequences are found for a received Y sequence

首先,如果没有为接收到的 y 序列找到联合的典型 x 序列,就可能发生错误

- Second, error can occur if an incorrect X sequence is jointly typical with a received Y sequence.

Second, error can occur if an incorrect X sequence is jointly typical with a received Y sequence.

其次,如果一个不正确的 x 序列与一个接收到的 y 序列是共同的典型,则可能发生错误。

- By the randomness of the code construction, we can assume that the average probability of error averaged over all codes does not depend on the index sent. Thus, without loss of generality, we can assume W = 1.

- From the joint AEP, we know that the probability that no jointly typical X exists goes to 0 as n grows large. We can bound this error probability by [math]\displaystyle{ \varepsilon }[/math].

- Also from the joint AEP, we know the probability that a particular [math]\displaystyle{ X_1^{n}(i) }[/math] and the [math]\displaystyle{ Y_1^n }[/math] resulting from W = 1 are jointly typical is [math]\displaystyle{ \le 2^{-n(I(X;Y) - 3\varepsilon)} }[/math].

Define: [math]\displaystyle{ E_i = \{(X_1^n(i), Y_1^n) \in A_\varepsilon^{(n)}\}, i = 1, 2, \dots, 2^{nR} }[/math]

Define: [math]\displaystyle{ E_i = \{(X_1^n(i), Y_1^n) \in A_\varepsilon^{(n)}\}, i = 1, 2, \dots, 2^{nR} }[/math]

as the event that message i is jointly typical with the sequence received when message 1 is sent.

as the event that message i is jointly typical with the sequence received when message 1 is sent.

作为消息 i 与消息1发送时接收到的序列一起发生的典型事件。

- [math]\displaystyle{ \lt math\gt 《数学》 \begin{align} \begin{align} 开始{ align } P(\text{error}) & {} = P(\text{error}|W=1) \le P(E_1^c) + \sum_{i=2}^{2^{nR}}P(E_i) \\ P(\text{error}) & {} = P(\text{error}|W=1) \le P(E_1^c) + \sum_{i=2}^{2^{nR}}P(E_i) \\ P (text { error }) & {} = p (text { error } | w = 1) le p (e_1 ^ c) + sum _ { i = 2} ^ {2 ^ { nR }} p (e_i) & {} \le P(E_1^c) + (2^{nR}-1)2^{-n(I(X;Y)-3\varepsilon)} \\ & {} \le P(E_1^c) + (2^{nR}-1)2^{-n(I(X;Y)-3\varepsilon)} \\ & {} le p (e _ 1 ^ c) + (2 ^ { nR }-1)2 ^ {-n (i (x; y)-3 varepsilon)} & {} \le \varepsilon + 2^{-n(I(X;Y)-R-3\varepsilon)}. & {} \le \varepsilon + 2^{-n(I(X;Y)-R-3\varepsilon)}. & {} le varepsilon + 2 ^ {-n (i (x; y)-R-3 varepsilon)}. \end{align} \end{align} 结束{ align } }[/math]

</math>

数学

We can observe that as [math]\displaystyle{ n }[/math] goes to infinity, if [math]\displaystyle{ R \lt I(X;Y) }[/math] for the channel, the probability of error will go to 0.

We can observe that as [math]\displaystyle{ n }[/math] goes to infinity, if [math]\displaystyle{ R \lt I(X;Y) }[/math] for the channel, the probability of error will go to 0.

我们可以观察到,当n趋于无穷大时,如果R<I(X;Y)对于通道,错误概率将趋于0。

Finally, given that the average codebook is shown to be "good," we know that there exists a codebook whose performance is better than the average, and so satisfies our need for arbitrarily low error probability communicating across the noisy channel.

Finally, given that the average codebook is shown to be "good," we know that there exists a codebook whose performance is better than the average, and so satisfies our need for arbitrarily low error probability communicating across the noisy channel.

最后,假设平均码本是“好的” ,我们知道存在一个性能优于平均值的码本,从而满足了我们在噪声信道中任意低错误概率通信的需要。

Weak converse for discrete memoryless channels离散无记忆信道的弱逆

Suppose a code of [math]\displaystyle{ 2^{nR} }[/math] codewords. Let W be drawn uniformly over this set as an index. Let [math]\displaystyle{ X^n }[/math] and [math]\displaystyle{ Y^n }[/math] be the transmitted codewords and received codewords, respectively.

Suppose a code of [math]\displaystyle{ 2^{nR} }[/math] codewords. Let W be drawn uniformly over this set as an index. Let [math]\displaystyle{ X^n }[/math] and [math]\displaystyle{ Y^n }[/math] be the transmitted codewords and received codewords, respectively.

假设一个2nR代码字的代码。让 w 作为索引均匀地绘制在这个集合上。让 x ^ n和 y ^ n 分别作为传输代码和接收代码。

- [math]\displaystyle{ nR = H(W) = H(W|Y^n) + I(W;Y^n) }[/math] using identities involving entropy and mutual information

[math]\displaystyle{ nR = H(W) = H(W|Y^n) + I(W;Y^n) }[/math] using identities involving entropy and mutual information

使用包含熵和互信息的恒等式nR=H(W)=H(W|Yn)+I(W;Yn)

- [math]\displaystyle{ \le H(W|Y^n) + I(X^n(W);Y^{n}) }[/math] since X is a function of W

[math]\displaystyle{ \le H(W|Y^n) + I(X^n(W);Y^{n}) }[/math] since X is a function of W

因为 x 是 w 的一个函数,所以它是一个数学公式

- [math]\displaystyle{ \le 1 + P_e^{(n)}nR + I(X^n(W);Y^n) }[/math] by the use of Fano's Inequality

[math]\displaystyle{ \le 1 + P_e^{(n)}nR + I(X^n(W);Y^n) }[/math] by the use of Fano's Inequality

利用 Fano 不等式,得到了一个新的数学公式≤1+Pe(n)nR+I(Xn(W);Yn)

- [math]\displaystyle{ \le 1 + P_e^{(n)}nR + nC }[/math] by the fact that capacity is maximized mutual information.

[math]\displaystyle{ \le 1 + P_e^{(n)}nR + nC }[/math] by the fact that capacity is maximized mutual information.

由于容量是最大化的互信息,因此≤1+Pe(n)nR+nC。

The result of these steps is that [math]\displaystyle{ P_e^{(n)} \ge 1 - \frac{1}{nR} - \frac{C}{R} }[/math]. As the block length [math]\displaystyle{ n }[/math] goes to infinity, we obtain [math]\displaystyle{ P_e^{(n)} }[/math] is bounded away from 0 if R is greater than C - we can get arbitrarily low rates of error only if R is less than C.

The result of these steps is that [math]\displaystyle{ P_e^{(n)} \ge 1 - \frac{1}{nR} - \frac{C}{R} }[/math]. As the block length [math]\displaystyle{ n }[/math] goes to infinity, we obtain [math]\displaystyle{ P_e^{(n)} }[/math] is bounded away from 0 if R is greater than C - we can get arbitrarily low rates of error only if R is less than C.

这些步骤的结果是Pe(n)≥1−1nR−CR。当块长度n 趋于无穷大时,我们得到当R大于C时Pe(n)远离0,我们只有当R小于C时才能得到任意低的误差率。

Strong converse for discrete memoryless channels离散无记忆信道的强逆

A strong converse theorem, proven by Wolfowitz in 1957,[4] states that,

A strong converse theorem, proven by Wolfowitz in 1957, states that,

沃尔福威茨在1957年证明了一个强逆定理,

- [math]\displaystyle{ \lt math\gt 《数学》 P_e \geq 1- \frac{4A}{n(R-C)^2} - e^{-\frac{n(R-C)}{2}} P_e \geq 1- \frac{4A}{n(R-C)^2} - e^{-\frac{n(R-C)}{2}} P _ e geq 1-frac {4A }{ n (R-C) ^ 2}-e ^ {-frac { n (R-C)}{2} }[/math]

</math>

数学

for some finite positive constant [math]\displaystyle{ A }[/math]. While the weak converse states that the error probability is bounded away from zero as [math]\displaystyle{ n }[/math] goes to infinity, the strong converse states that the error goes to 1. Thus, [math]\displaystyle{ C }[/math] is a sharp threshold between perfectly reliable and completely unreliable communication.

for some finite positive constant [math]\displaystyle{ A }[/math]. While the weak converse states that the error probability is bounded away from zero as [math]\displaystyle{ n }[/math] goes to infinity, the strong converse states that the error goes to 1. Thus, [math]\displaystyle{ C }[/math] is a sharp threshold between perfectly reliable and completely unreliable communication.

为了某个有限的正常数。当弱逆表示错误概率远离零是有界的时候,强逆表示错误概率远离零是有界的。因此,C是完全可靠和完全不可靠的通信之间的一个尖锐的门槛。

Channel coding theorem for non-stationary memoryless channels

We assume that the channel is memoryless, but its transition probabilities change with time, in a fashion known at the transmitter as well as the receiver.

We assume that the channel is memoryless, but its transition probabilities change with time, in a fashion known at the transmitter as well as the receiver.

我们假设信道是无记忆的,但是它的跃迁概率随时间而变化,这种变化在发射机和接收机中都是已知的。

Then the channel capacity is given by

Then the channel capacity is given by

然后通过对信道容量的分析,给出信道容量的计算公式

- [math]\displaystyle{ \lt math\gt 《数学》 C=\lim \inf \max_{p^{(X_1)},p^{(X_2)},...}\frac{1}{n}\sum_{i=1}^nI(X_i;Y_i). C=\lim \inf \max_{p^{(X_1)},p^{(X_2)},...}\frac{1}{n}\sum_{i=1}^nI(X_i;Y_i). C = lim inf max { p ^ {(x _ 1)} ,p ^ {(x _ 2)} ,... } frac {1}{ n } sum { i = 1} ^ nI (x _ i; y _ i). }[/math]

</math>

数学

The maximum is attained at the capacity achieving distributions for each respective channel. That is,

The maximum is attained at the capacity achieving distributions for each respective channel. That is,

在每个通道的容量分配上达到最大值。就是,

[math]\displaystyle{ \lt math\gt 《数学》 C=\lim \inf \frac{1}{n}\sum_{i=1}^n C_i C=\lim \inf \frac{1}{n}\sum_{i=1}^n C_i 1}{ n } sum { i = 1} ^ n c _ i }[/math]

</math>

数学

where [math]\displaystyle{ C_i }[/math] is the capacity of the ith channel.

where [math]\displaystyle{ C_i }[/math] is the capacity of the ith channel.

其中Ci是第i通道的容量。

Outline of the proof证明概述

The proof runs through in almost the same way as that of channel coding theorem. Achievability follows from random coding with each symbol chosen randomly from the capacity achieving distribution for that particular channel. Typicality arguments use the definition of typical sets for non-stationary sources defined in the asymptotic equipartition property article.

The proof runs through in almost the same way as that of channel coding theorem. Achievability follows from random coding with each symbol chosen randomly from the capacity achieving distribution for that particular channel. Typicality arguments use the definition of typical sets for non-stationary sources defined in the asymptotic equipartition property article.

该证明与信道编码定理的证明几乎相同。可实现性来自随机编码,每个符号从该特定信道的容量实现分布中随机选择。典型性参数使用渐近均分性质文章中定义的非平稳源的典型集的定义

The technicality of lim inf comes into play when [math]\displaystyle{ \frac{1}{n}\sum_{i=1}^n C_i }[/math] does not converge.

The technicality of lim inf comes into play when [math]\displaystyle{ \frac{1}{n}\sum_{i=1}^n C_i }[/math] does not converge.

当 1n∑i=1nCi不收敛时,lim inf 的技术性就发挥了作用。

See also参见

渐近均分性

法诺不等式

率失真理论

香农信源编码定理

香农-哈特莱定理

涡轮码

Notes批注

- ↑ "A new basic theorem of information theory". Feinstein, Amiel. 1954. Bibcode:1955PhDT........12F. hdl:1721.1/4798.

{{cite journal}}: Cite journal requires|journal=(help)CS1 maint: others (link) - ↑ Sae-Young Chung, G. David Forney, Jr., Thomas J. Richardson, and Rüdiger Urbanke, "On the Design of Low-Density Parity-Check Codes within 0.0045 dB of the Shannon Limit", IEEE Communications Letters, 5: 58-60, Feb. 2001. ISSN 1089-7798

- ↑ For a description of the "sup" function, see Supremum

- ↑ Robert Gallager. Information Theory and Reliable Communication. New York: John Wiley & Sons, 1968.

References参考

- Cover T. M., Thomas J. A., Elements of Information Theory, John Wiley & Sons, 1991.

- Fano, R. A., Transmission of information; a statistical theory of communications, MIT Press, 1961.

- Feinstein, Amiel, "A New basic theorem of information theory", IEEE Transactions on Information Theory, 4(4): 2-22, 1954.

- MacKay, David J. C., Information Theory, Inference, and Learning Algorithms, Cambridge University Press, 2003. [free online]

- Shannon, C. E., A Mathematical Theory of Communication. The Bell System Technical Journal 27,3: 379–423, 1948.

- Shannon, C. E., A Mathematical Theory of Communication Urbana, IL: University of Illinois Press, 1948 (reprinted 1998).

- Wolfowitz, J., "The coding of messages subject to chance errors", Illinois J. Math., 1: 591–606, 1957.

External links外部链接

Category:Information theory

范畴: 信息论

Category:Theorems in discrete mathematics

范畴: 离散数学的定理

Category:Telecommunication theory

范畴: 电信理论

Category:Coding theory

类别: 编码理论

This page was moved from wikipedia:en:Noisy-channel coding theorem. Its edit history can be viewed at 有噪信道编码定理/edithistory

- Fano, R. A., Transmission of information; a statistical theory of communications, MIT Press, 1961.