Probabilistic graphical model


Revision as of 19:54, 31 October 2020 (Saturday)

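This entry was provisionally translated by Yuling and has not yet been manually edited or proofread; we apologize for any inconvenience in reading.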

A graphical model, probabilistic graphical model (PGM), or structured probabilistic model is a probabilistic model for which a graph expresses the conditional dependence structure between random variables. Graphical models are commonly used in probability theory, statistics (particularly Bayesian statistics), and machine learning.


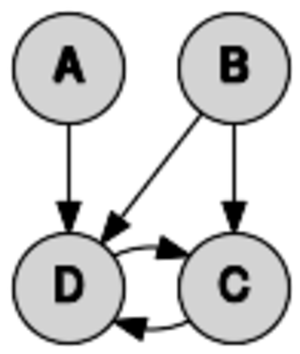

An example of a graphical model. Each arrow indicates a dependency. In this example: D depends on A, B, and C; and C depends on B and D; whereas A and B are each independent.


Types of graphical models


Generally, probabilistic graphical models use a graph-based representation as the foundation for encoding a distribution over a multi-dimensional space; the graph is a compact or factorized representation of a set of independences that hold in the specific distribution. Two branches of graphical representations of distributions are commonly used: Bayesian networks and Markov random fields. Both families encompass the properties of factorization and independence, but they differ in the set of independences they can encode and in the factorization of the distribution that they induce.[3]
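To make the difference in induced factorizations concrete, the two forms can be written side by side. The directed form is the one stated in the Bayesian network section; the undirected form, a normalized product of nonnegative potentials ψ_C over the cliques C of the graph, is the standard Gibbs parameterization of a Markov random field and is given here as background rather than taken from this article.

```latex
% Bayesian network (directed acyclic graph): one conditional distribution per node
P(x_1,\ldots,x_n) = \prod_{i=1}^{n} P\bigl(x_i \mid \text{pa}(x_i)\bigr)

% Markov random field (undirected graph): clique potentials \psi_C, normalized by Z
P(x_1,\ldots,x_n) = \frac{1}{Z} \prod_{C \in \mathcal{C}} \psi_C(x_C),
\qquad
Z = \sum_{x_1,\ldots,x_n} \prod_{C \in \mathcal{C}} \psi_C(x_C)
```

In the directed case every factor is already a normalized conditional distribution, so no global constant is needed; in the undirected case the potentials need not be probabilities, which is why the partition function Z appears.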


Bayesian network


If the network structure of the model is a directed acyclic graph, the model represents a factorization of the joint probability of all random variables. More precisely, if the random variables are [math]\displaystyle{ X_1,\ldots,X_n }[/math], then the joint probability satisfies


[math]\displaystyle{ P[X_1,\ldots,X_n]=\prod_{i=1}^n P[X_i \mid \text{pa}(X_i)] }[/math]

where [math]\displaystyle{ \text{pa}(X_i) }[/math] is the set of parents of node [math]\displaystyle{ X_i }[/math] (nodes with edges directed towards [math]\displaystyle{ X_i }[/math]). In other words, the joint distribution factors into a product of conditional distributions. For example, the graphical model in the figure above (which is actually not a directed acyclic graph but an ancestral graph) consists of the random variables [math]\displaystyle{ A, B, C, D }[/math]


with a joint probability density that factors as


[math]\displaystyle{ P[A,B,C,D] = P[A]\cdot P[B]\cdot P[C,D \mid A,B] }[/math]
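A small numeric check of this factorization, using made-up probability tables (every number below is hypothetical, and all four variables are assumed binary). Because C and D depend on each other, they cannot be given separate conditionals as in a DAG, so this sketch stores one joint table for (C, D) per value of (A, B). Summing out C and D recovers P[A]·P[B], which is the independence of A and B described in the figure caption.

```python
from itertools import product

# Hypothetical tables; A, B, C, D are all binary.
p_a = {0: 0.7, 1: 0.3}  # P[A]
p_b = {0: 0.4, 1: 0.6}  # P[B]
# P[C, D | A, B]: one distribution over the four (c, d) pairs for each (a, b).
p_cd_given_ab = {
    (0, 0): {(0, 0): 0.1, (0, 1): 0.2, (1, 0): 0.3, (1, 1): 0.4},
    (0, 1): {(0, 0): 0.25, (0, 1): 0.25, (1, 0): 0.25, (1, 1): 0.25},
    (1, 0): {(0, 0): 0.5, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.3},
    (1, 1): {(0, 0): 0.05, (0, 1): 0.15, (1, 0): 0.6, (1, 1): 0.2},
}


def joint(a, b, c, d):
    """P[A,B,C,D] = P[A] * P[B] * P[C,D | A,B]."""
    return p_a[a] * p_b[b] * p_cd_given_ab[(a, b)][(c, d)]


# The three factors define a proper distribution (it sums to 1) ...
total = sum(joint(*v) for v in product((0, 1), repeat=4))
assert abs(total - 1.0) < 1e-12

# ... and marginalizing out C and D leaves P[A] * P[B], so A and B are independent.
for a, b in product((0, 1), repeat=2):
    marginal = sum(joint(a, b, c, d) for c, d in product((0, 1), repeat=2))
    assert abs(marginal - p_a[a] * p_b[b]) < 1e-12
```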

Each node is conditionally independent of its non-descendants given the values of its parents. More generally, any two sets of nodes are conditionally independent given a third set if a criterion called d-separation holds in the graph. Local independences and global independences are equivalent in Bayesian networks.
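One way to test d-separation mechanically is sketched below. Instead of checking the blocking rules path by path, it uses the equivalent moralized-ancestral-graph criterion: restrict the DAG to the ancestors of the three node sets, connect ("marry") every pair of parents that share a child, drop edge directions, delete the conditioning set, and check whether any path still links the two sets. The graph is given as a hypothetical `parents` mapping from each node to a tuple of its parents; `ancestors`, `d_separated`, and the example node names are illustrative, not from any library.

```python
from collections import deque


def ancestors(parents, nodes):
    """Return `nodes` together with all of their ancestors."""
    seen, stack = set(nodes), list(nodes)
    while stack:
        for p in parents.get(stack.pop(), ()):
            if p not in seen:
                seen.add(p)
                stack.append(p)
    return seen


def d_separated(parents, xs, ys, zs):
    """True if node sets xs and ys are d-separated by zs in the DAG `parents`."""
    xs, ys, zs = set(xs), set(ys), set(zs)
    keep = ancestors(parents, xs | ys | zs)

    # Moralize the ancestral subgraph: undirected child-parent edges,
    # plus an edge between every pair of parents that share a child.
    adj = {v: set() for v in keep}
    for child in keep:
        ps = list(parents.get(child, ()))
        for i, p in enumerate(ps):
            adj[child].add(p)
            adj[p].add(child)
            for q in ps[i + 1:]:
                adj[p].add(q)
                adj[q].add(p)

    # Remove the conditioning set, then search for any remaining path xs -> ys.
    frontier = deque(xs - zs)
    seen = set(frontier)
    while frontier:
        v = frontier.popleft()
        if v in ys:
            return False
        for w in adj[v] - zs - seen:
            seen.add(w)
            frontier.append(w)
    return True


# Hypothetical collider: Rain -> WetGrass <- Sprinkler.
parents = {"Rain": (), "Sprinkler": (), "WetGrass": ("Rain", "Sprinkler")}
print(d_separated(parents, {"Rain"}, {"Sprinkler"}, set()))         # True
print(d_separated(parents, {"Rain"}, {"Sprinkler"}, {"WetGrass"}))  # False
```

The collider case shows why conditioning can create dependence: observing the common child marries its parents in the moral graph.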


This type of graphical model is known as a directed graphical model, Bayesian network, or belief network. Classic machine learning models such as hidden Markov models and neural networks, as well as newer models such as variable-order Markov models, can be considered special cases of Bayesian networks.
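To illustrate the hidden Markov model case, the sketch below writes an HMM's joint distribution as the Bayesian-network product of each node given its parents (Z_1 has no parents, Z_t has parent Z_{t-1}, and each observation X_t has parent Z_t). The two-state parameters and the names `init`, `trans`, `emit`, `joint`, and `forward` are hypothetical. Because the graph is a chain, the forward recursion computes P[X_1, ..., X_T] in time linear in T, and it agrees with brute-force summation of the factorized joint over all hidden paths.

```python
from itertools import product

# Hypothetical two-state HMM (all numbers made up for illustration).
init = [0.6, 0.4]                 # init[k] = P[Z_1 = k]
trans = [[0.7, 0.3], [0.2, 0.8]]  # trans[j][k] = P[Z_{t+1} = k | Z_t = j]
emit = [[0.9, 0.1], [0.3, 0.7]]   # emit[k][x] = P[X_t = x | Z_t = k]
K = len(init)


def joint(zs, xs):
    """P[Z_1..Z_T, X_1..X_T] as a product of each node given its parents."""
    p = init[zs[0]] * emit[zs[0]][xs[0]]
    for t in range(1, len(xs)):
        p *= trans[zs[t - 1]][zs[t]] * emit[zs[t]][xs[t]]
    return p


def forward(xs):
    """P[X_1..X_T] via the forward recursion: O(T * K^2) rather than O(K^T)."""
    alpha = [init[k] * emit[k][xs[0]] for k in range(K)]
    for x in xs[1:]:
        alpha = [sum(alpha[j] * trans[j][k] for j in range(K)) * emit[k][x]
                 for k in range(K)]
    return sum(alpha)


xs = [0, 1, 1, 0]
# Brute force: sum the factorized joint over every hidden state path.
brute = sum(joint(zs, xs) for zs in product(range(K), repeat=len(xs)))
print(abs(brute - forward(xs)) < 1e-12)  # True
```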


Other types


- Naive Bayes classifier where we use a tree with a single root


- Dependency network where cycles are allowed


- Tree-augmented classifier or TAN model


- A factor graph is an undirected bipartite graph connecting variables and factors. Each factor represents a function over the variables it is connected to. This is a helpful representation for understanding and implementing belief propagation.


- A clique tree or junction tree is a tree of cliques, used in the junction tree algorithm.


- A chain graph is a graph which may have both directed and undirected edges, but without any directed cycles (i.e. if we start at any vertex and move along the graph respecting the directions of any arrows, we cannot return to the vertex we started from if we have passed an arrow). Both directed acyclic graphs and undirected graphs are special cases of chain graphs, which can therefore provide a way of unifying and generalizing Bayesian and Markov networks.[1]


- An ancestral graph is a further extension, having directed, bidirected and undirected edges.[2]


- Random field techniques

  - A Markov random field, also known as a Markov network, is a model over an undirected graph. A graphical model with many repeated subunits can be represented with plate notation.

  - A conditional random field is a discriminative model specified over an undirected graph.

  - A restricted Boltzmann machine is a bipartite generative model specified over an undirected graph.
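As a rough illustration of why factor graphs suit belief propagation, the sketch below runs the sum-product algorithm on a hypothetical chain factor graph over three binary variables, with one unary factor per variable and one pairwise factor per neighboring pair (all factor values and function names are made up). On a tree-structured factor graph such as this chain, the messages give exact marginals, so they can be checked against brute-force enumeration; on graphs with cycles the same updates (loopy belief propagation) are in general only approximate.

```python
from itertools import product

# Hypothetical factor graph: x0 - g0 - x1 - g1 - x2, each x_i in {0, 1},
# plus a unary factor f_i on every variable. Values are nonnegative weights.
unary = [[1.0, 2.0], [1.0, 1.0], [3.0, 1.0]]  # unary[i][a] = f_i(a)
pair = [[[2.0, 1.0], [1.0, 2.0]],              # pair[0][a][b] = g_0(a, b)
        [[1.0, 3.0], [3.0, 1.0]]]              # pair[1][a][b] = g_1(a, b)
N, K = len(unary), 2


def normalize(w):
    z = sum(w)
    return [v / z for v in w]


def brute_marginal(i):
    """Marginal of x_i by summing the product of all factors over every assignment."""
    w = [0.0] * K
    for xs in product(range(K), repeat=N):
        p = 1.0
        for j in range(N):
            p *= unary[j][xs[j]]
        for j in range(N - 1):
            p *= pair[j][xs[j]][xs[j + 1]]
        w[xs[i]] += p
    return normalize(w)


def bp_marginal(i):
    """Marginal of x_i from sum-product messages passed along the chain."""
    # left[j][b]: message reaching x_j from all factors to its left.
    left = [[1.0] * K for _ in range(N)]
    for j in range(1, N):
        left[j] = [sum(left[j - 1][a] * unary[j - 1][a] * pair[j - 1][a][b]
                       for a in range(K)) for b in range(K)]
    # right[j][a]: message reaching x_j from all factors to its right.
    right = [[1.0] * K for _ in range(N)]
    for j in range(N - 2, -1, -1):
        right[j] = [sum(pair[j][a][b] * unary[j + 1][b] * right[j + 1][b]
                        for b in range(K)) for a in range(K)]
    # Belief: incoming messages times the variable's own unary factor.
    return normalize([left[i][a] * unary[i][a] * right[i][a] for a in range(K)])


for i in range(N):
    print(i, bp_marginal(i), brute_marginal(i))
```

Each message is computed once per edge and direction, so the cost grows linearly with the chain length instead of exponentially as in the brute-force sum.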

Applications

The framework of the models provides algorithms for discovering and analyzing structure in complex distributions, describing them succinctly, and extracting unstructured information, which allows the models to be constructed and used effectively.[3] Applications of graphical models include causal inference, information extraction, speech recognition, computer vision, decoding of low-density parity-check codes, modeling of gene regulatory networks, gene finding and diagnosis of diseases, and graphical models for protein structure.


See also

- Belief propagation

- Structural equation model

Notes

1. Frydenberg, Morten (1990). "The Chain Graph Markov Property". Scandinavian Journal of Statistics. 17 (4): 333–353. JSTOR 4616181. MR 1096723.

2. Richardson, Thomas; Spirtes, Peter (2002). "Ancestral graph Markov models". Annals of Statistics. 30 (4): 962–1030. CiteSeerX 10.1.1.33.4906. doi:10.1214/aos/1031689015. MR 1926166. Zbl 1033.60008.

3. Koller, D.; Friedman, N. (2009). Probabilistic Graphical Models. MIT Press.

Further reading

Books and book chapters

- Barber, David (2012). Bayesian Reasoning and Machine Learning. Cambridge University Press. ISBN 978-0-521-51814-7.

- Bishop, Christopher M. (2006). ["Chapter 8. Graphical Models"](https://www.microsoft.com/en-us/research/wp-content/uploads/2016/05/Bishop-PRML-sample.pdf). [Pattern Recognition and Machine Learning](https://www.springer.com/us/book/9780387310732). Springer. pp. 359–422. ISBN 978-0-387-31073-2. MR 2247587.

- Cowell, Robert G.; Dawid, A. Philip; Lauritzen, Steffen L.; Spiegelhalter, David J. (1999). Probabilistic networks and expert systems. Berlin: Springer. ISBN 978-0-387-98767-5. MR 1697175. A more advanced and statistically oriented book.

- Jensen, Finn (1996). An introduction to Bayesian networks. Berlin: Springer. ISBN 978-0-387-91502-9.

- Pearl, Judea (1988). Probabilistic Reasoning in Intelligent Systems (2nd revised ed.). San Mateo, CA: Morgan Kaufmann. ISBN 978-1-55860-479-7. MR 0965765. A computational reasoning approach in which the relationships between graphs and probabilities were formally introduced.

Journal articles

- Airoldi, Edoardo M. (2007). "Getting Started in Probabilistic Graphical Models". PLoS Computational Biology. 3 (12): e252. doi:10.1371/journal.pcbi.0030252. PMC 2134967. PMID 18069887.

- Jordan, M. I. (2004). "Graphical Models". Statistical Science. 19: 140–155. doi:10.1214/088342304000000026.

- Ghahramani, Zoubin (May 2015). "Probabilistic machine learning and artificial intelligence". Nature. 521 (7553): 452–459. doi:10.1038/nature14541. PMID 26017444.

Other

External links

Category:Bayesian statistics


This page was moved from wikipedia:en:Graphical model. Its edit history can be viewed at 概率图模型/edithistory


- Bayesian statistics

- Graphical models
