Ant colony optimization algorithms


Ant behavior was the inspiration for the metaheuristic optimization technique

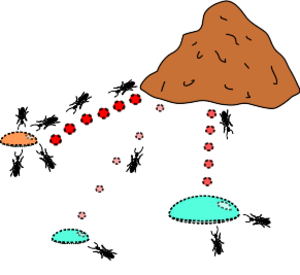

When a colony of ants is confronted with the choice of reaching their food via two different routes, one of which is much shorter than the other, their choice is entirely random. However, the ants that take the shorter route reach the food faster and therefore travel back and forth between the anthill and the food more often.

In computer science and operations research, the ant colony optimization algorithm (ACO) is a probabilistic technique for solving computational problems which can be reduced to finding good paths through graphs. Artificial Ants stand for multi-agent methods inspired by the behavior of real ants.

The pheromone-based communication of biological ants is often the predominant paradigm used.[2] Combinations of Artificial Ants and local search algorithms have become a method of choice for numerous optimization tasks involving some sort of graph, e.g., vehicle routing and internet routing. The burgeoning activity in this field has led to conferences dedicated solely to Artificial Ants, and to numerous commercial applications by specialized companies such as AntOptima.

As an example, Ant colony optimization[3] is a class of optimization algorithms modeled on the actions of an ant colony. Artificial 'ants' (e.g. simulation agents) locate optimal solutions by moving through a parameter space representing all possible solutions. Real ants lay down pheromones directing each other to resources while exploring their environment. The simulated 'ants' similarly record their positions and the quality of their solutions, so that in later simulation iterations more ants locate better solutions.[4] One variation on this approach is the bees algorithm, which is more analogous to the foraging patterns of the honey bee, another social insect.

This algorithm is a member of the ant colony algorithms family of swarm intelligence methods, and it is a form of metaheuristic optimization. Initially proposed by Marco Dorigo in 1992 in his PhD thesis,[5][6] the first algorithm aimed to find an optimal path in a graph, based on the behavior of ants seeking a path between their colony and a source of food. The original idea has since diversified to solve a wider class of numerical problems, and as a result several algorithms have emerged, drawing on various aspects of the behavior of ants. From a broader perspective, ACO performs a model-based search[7] and shares some similarities with estimation of distribution algorithms.

Overview

In the natural world, ants of some species (initially) wander randomly, and upon finding food return to their colony while laying down pheromone trails. If other ants find such a path, they are likely not to keep travelling at random, but instead to follow the trail, returning and reinforcing it if they eventually find food (see Ant communication).[8]

Over time, however, the pheromone trail starts to evaporate, thus reducing its attractive strength. The more time it takes for an ant to travel down the path and back again, the more time the pheromones have to evaporate. A short path, by comparison, gets marched over more frequently, and thus the pheromone density becomes higher on shorter paths than longer ones. Pheromone evaporation also has the advantage of avoiding the convergence to a locally optimal solution. If there were no evaporation at all, the paths chosen by the first ants would tend to be excessively attractive to the following ones. In that case, the exploration of the solution space would be constrained. The influence of pheromone evaporation in real ant systems is unclear, but it is very important in artificial systems.[9]
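
The interplay of reinforcement and evaporation can be illustrated with a deliberately simplified, deterministic sketch. It is not a model of real ants: it assumes a single ant shuttling along a path, a unit deposit each time the ant completes a traversal, and a fixed evaporation fraction `rho` per time step. The function name `trail_level` and all parameter values are hypothetical.

```python
def trail_level(path_length, rho=0.1, steps=200, deposit=1.0):
    """Pheromone left on a path that one ant traverses every `path_length` steps.

    Assumptions for illustration only: a fraction `rho` of the trail
    evaporates at every time step, and the ant adds `deposit` units each
    time it completes a traversal.
    """
    level = 0.0
    for t in range(1, steps + 1):
        level *= 1.0 - rho            # evaporation at every time step
        if t % path_length == 0:      # traversal completed: reinforce the trail
            level += deposit
    return level


short_level = trail_level(path_length=2)
long_level = trail_level(path_length=5)
print(short_level > long_level)
```

Under these assumptions the short path settles at a higher pheromone level, because it is reinforced more often between evaporation steps. With `rho=0.0` the levels only grow, which mirrors the remark that without evaporation early trails stay excessively attractive.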

The overall result is that when one ant finds a good (i.e., short) path from the colony to a food source, other ants are more likely to follow that path, and positive feedback eventually leads to many ants following a single path. The idea of the ant colony algorithm is to mimic this behavior with "simulated ants" walking around the graph representing the problem to solve.

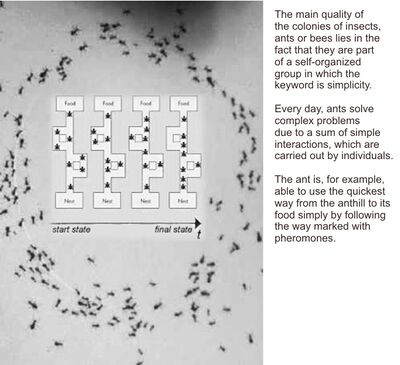

Ambient networks of intelligent objects

New concepts are required since “intelligence” is no longer centralized but can be found throughout all minuscule objects. Anthropocentric concepts have led to the production of IT systems in which data processing, control units and computing power are centralized. These centralized units have continually increased their performance and can be compared to the human brain. The model of the brain has become the ultimate vision of computers. Ambient networks of intelligent objects and, sooner or later, a new generation of information systems that are even more diffuse and based on nanotechnology will profoundly change this concept. Small devices that can be compared to insects do not possess high intelligence on their own. Indeed, their intelligence can be classed as fairly limited. It is, for example, impossible to integrate a high-performance calculator with the power to solve any kind of mathematical problem into a biochip that is implanted into the human body or integrated into an intelligent tag designed to trace commercial articles. However, once those objects are interconnected, they possess a form of intelligence that can be compared to a colony of ants or bees. For certain problems, this type of intelligence can be superior to the reasoning of a centralized system similar to the brain.[10]

Nature offers several examples of how minuscule organisms, if they all follow the same basic rule, can create a form of collective intelligence on the macroscopic level. Colonies of social insects perfectly illustrate this model, which differs greatly from human societies. This model is based on the co-operation of independent units with simple and unpredictable behavior.[11] They move through their surrounding area to carry out certain tasks and possess only a very limited amount of information to do so. A colony of ants, for example, exhibits numerous qualities that can also be applied to a network of ambient objects. Colonies of ants have a very high capacity to adapt to changes in the environment, as well as great robustness in dealing with situations where one individual fails to carry out a given task. This kind of flexibility would also be very useful for mobile networks of objects, which are perpetually developing. Parcels of information that move from a computer to a digital object behave in the same way as ants would. They move through the network and pass from one node to the next with the objective of arriving at their final destination as quickly as possible.[12]

Artificial pheromone system

Pheromone-based communication is one of the most effective means of communication widely observed in nature. Pheromones are used by social insects such as bees, ants and termites, both for inter-agent and agent-swarm communication. Owing to their feasibility, artificial pheromones have been adopted in multi-robot and swarm robotic systems. Pheromone-based communication has been implemented by different means, such as chemical[13][14][15] or physical (RFID tags,[16] light,[17][18][19][20] sound[21]) ones. However, those implementations were not able to replicate all the aspects of pheromones as seen in nature.

The use of projected light was presented in a 2007 IEEE paper by Garnier, Simon, et al.[22] as an experimental setup to study pheromone-based communication with micro autonomous robots. Another study proposed a novel pheromone communication method, COSΦ,[23] for a swarm robotic system, based on precise and fast visual localization.[24]

The system allows simulation of a virtually unlimited number of different pheromones and provides the result of their interaction as a gray-scale image on a horizontal LCD screen that the robots move on. In order to demonstrate the pheromone communication method, Colias autonomous micro robot was deployed as the swarm robotic platform.[citation needed]

Algorithm and formulae

In the ant colony optimization algorithms, an artificial ant is a simple computational agent that searches for good solutions to a given optimization problem. To apply an ant colony algorithm, the optimization problem needs to be converted into the problem of finding the shortest path on a weighted graph. In the first step of each iteration, each ant stochastically constructs a solution, i.e. the order in which the edges in the graph should be followed. In the second step, the paths found by the different ants are compared. The last step consists of updating the pheromone levels on each edge.

    procedure ACO_MetaHeuristic is
        while not_termination do
            generateSolutions()
            daemonActions()
            pheromoneUpdate()
        repeat
    end procedure
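
The loop above can be sketched in Python as a higher-order function. This is only a skeleton under stated assumptions: the three steps are passed in as callables, a solution is represented as a `(solution, cost)` pair with lower cost being better, and termination is a fixed iteration budget. None of these choices come from the pseudocode itself, and the name `aco_metaheuristic` is hypothetical.

```python
from typing import Callable, List, Optional, Tuple, TypeVar

S = TypeVar("S")  # type of a candidate solution (assumption: any Python object)
Scored = Tuple[S, float]  # (solution, cost) pair, lower cost is better


def aco_metaheuristic(
    generate_solutions: Callable[[], List[Scored]],
    daemon_actions: Callable[[List[Scored]], List[Scored]],
    pheromone_update: Callable[[List[Scored]], None],
    max_iterations: int,
) -> Optional[Scored]:
    """Run the three ACO steps for a fixed number of iterations."""
    best: Optional[Scored] = None
    for _ in range(max_iterations):               # "while not_termination"
        solutions = generate_solutions()          # each ant builds a solution
        solutions = daemon_actions(solutions)     # optional centralized step, e.g. local search
        pheromone_update(solutions)               # evaporate and deposit pheromone
        for candidate in solutions:               # keep track of the best solution seen
            if best is None or candidate[1] < best[1]:
                best = candidate
    return best
```

The daemon step is kept as a pass-through hook here; in practice it is where problem-specific actions such as a local search would be plugged in.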

Edge selection

Each ant needs to construct a solution to move through the graph. To select the next edge in its tour, an ant will consider the length of each edge available from its current position, as well as the corresponding pheromone level. At each step of the algorithm, each ant moves from a state [math]\displaystyle{ x }[/math] to state [math]\displaystyle{ y }[/math], corresponding to a more complete intermediate solution. Thus, each ant [math]\displaystyle{ k }[/math] computes a set [math]\displaystyle{ A_k(x) }[/math] of feasible expansions to its current state in each iteration, and moves to one of these in probability. For ant [math]\displaystyle{ k }[/math], the probability [math]\displaystyle{ p_{xy}^k }[/math] of moving from state [math]\displaystyle{ x }[/math] to state [math]\displaystyle{ y }[/math] depends on the combination of two values, the attractiveness [math]\displaystyle{ \eta_{xy} }[/math] of the move, as computed by some heuristic indicating the a priori desirability of that move and the trail level [math]\displaystyle{ \tau_{xy} }[/math] of the move, indicating how proficient it has been in the past to make that particular move. The trail level represents a posteriori indication of the desirability of that move.

In general, the [math]\displaystyle{ k }[/math]th ant moves from state [math]\displaystyle{ x }[/math] to state [math]\displaystyle{ y }[/math] with probability

[math]\displaystyle{ p_{xy}^k = \frac{ (\tau_{xy}^{\alpha}) (\eta_{xy}^{\beta}) }{ \sum_{z\in \mathrm{allowed}_x} (\tau_{xz}^{\alpha}) (\eta_{xz}^{\beta}) } }[/math]

where

[math]\displaystyle{ \tau_{xy} }[/math] is the amount of pheromone deposited for transition from state [math]\displaystyle{ x }[/math] to [math]\displaystyle{ y }[/math], 0 ≤ [math]\displaystyle{ \alpha }[/math] is a parameter to control the influence of [math]\displaystyle{ \tau_{xy} }[/math], [math]\displaystyle{ \eta_{xy} }[/math] is the desirability of state transition [math]\displaystyle{ xy }[/math] (a priori knowledge, typically [math]\displaystyle{ 1/d_{xy} }[/math], where [math]\displaystyle{ d }[/math] is the distance) and [math]\displaystyle{ \beta }[/math] ≥ 1 is a parameter to control the influence of [math]\displaystyle{ \eta_{xy} }[/math]. [math]\displaystyle{ \tau_{xz} }[/math] and [math]\displaystyle{ \eta_{xz} }[/math] represent the trail level and attractiveness for the other possible state transitions.
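
As a minimal sketch, the edge selection rule can be written in Python as follows. The nested-dictionary layout `tau[x][y]` and `eta[x][y]`, the function names, and the default values of `alpha` and `beta` are assumptions made for illustration; `allowed` plays the role of the feasible set of next states.

```python
import random


def transition_probabilities(x, allowed, tau, eta, alpha=1.0, beta=2.0):
    """Return {y: p_xy} for every feasible next state y in `allowed`.

    tau[x][y] is the trail level and eta[x][y] the attractiveness
    (for example 1 / d_xy); both layouts are illustrative assumptions.
    """
    weights = {y: (tau[x][y] ** alpha) * (eta[x][y] ** beta) for y in allowed}
    total = sum(weights.values())
    return {y: w / total for y, w in weights.items()}


def choose_next(x, allowed, tau, eta, alpha=1.0, beta=2.0, rng=random):
    """Sample the next state according to the probabilities above."""
    probs = transition_probabilities(x, allowed, tau, eta, alpha, beta)
    states = list(probs)
    return rng.choices(states, weights=[probs[y] for y in states], k=1)[0]


# Two candidate moves with equal pheromone; state 2 is twice as far as state 1.
tau = {0: {1: 1.0, 2: 1.0}}
eta = {0: {1: 1.0, 2: 0.5}}
probs = transition_probabilities(0, [1, 2], tau, eta)
print(probs)
```

With equal trail levels and `beta = 2`, halving the attractiveness divides the unnormalized weight by four, so the nearer state receives probability 0.8 and the farther one 0.2.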

Pheromone update

Trails are usually updated when all ants have completed their solution, increasing or decreasing the level of trails corresponding to moves that were part of "good" or "bad" solutions, respectively. An example of a global pheromone updating rule is

[math]\displaystyle{ \tau_{xy} \leftarrow (1-\rho)\tau_{xy} + \sum_{k}\Delta \tau^{k}_{xy} }[/math]

where [math]\displaystyle{ \tau_{xy} }[/math] is the amount of pheromone deposited for a state transition [math]\displaystyle{ xy }[/math], [math]\displaystyle{ \rho }[/math] is the pheromone evaporation coefficient and [math]\displaystyle{ \Delta \tau^{k}_{xy} }[/math] is the amount of pheromone deposited by [math]\displaystyle{ k }[/math]th ant, typically given for a TSP problem (with moves corresponding to arcs of the graph) by

[math]\displaystyle{ \Delta \tau^{k}_{xy} = \begin{cases} Q/L_k & \mbox{if ant }k\mbox{ uses curve }xy\mbox{ in its tour} \\ 0 & \mbox{otherwise} \end{cases} }[/math]

where [math]\displaystyle{ L_k }[/math] is the cost of the [math]\displaystyle{ k }[/math]th ant's tour (typically length) and [math]\displaystyle{ Q }[/math] is a constant.
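
The global update rule can be sketched in Python as below. The sketch assumes that pheromone is stored as a nested dictionary `tau[x][y]` over directed arcs, that each tour is a list of states closed back to its first element, and that `rho` and `Q` take illustrative default values; the function name is hypothetical.

```python
def update_pheromone(tau, tours, lengths, rho=0.5, Q=1.0):
    """Apply evaporation, then let every ant deposit Q / L_k on the arcs it used."""
    # Evaporation: every arc keeps a fraction (1 - rho) of its pheromone.
    for x in tau:
        for y in tau[x]:
            tau[x][y] *= 1.0 - rho
    # Deposit: ant k adds Q / L_k to each arc (x, y) of its closed tour.
    for tour, length in zip(tours, lengths):
        for x, y in zip(tour, tour[1:] + tour[:1]):
            tau[x][y] += Q / length
    return tau


# Three states, one ant with tour 0 -> 1 -> 2 -> 0 of total length 3.
tau = {0: {1: 1.0, 2: 1.0}, 1: {0: 1.0, 2: 1.0}, 2: {0: 1.0, 1: 1.0}}
update_pheromone(tau, tours=[[0, 1, 2]], lengths=[3.0], rho=0.5, Q=3.0)
print(tau[0][1], tau[1][0])
```

Arcs on the tour end at 0.5 + 3.0/3.0 = 1.5, while unused arcs only evaporate to 0.5. For a symmetric problem one would typically also deposit on the reverse arc, which this sketch deliberately omits.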

Common extensions

Here are some of the most popular variations of ACO algorithms.

Ant System (AS)

The Ant System is the first ACO algorithm. This algorithm corresponds to the one presented above. It was developed by Dorigo.[25]
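
Putting edge selection and the global pheromone update together, a minimal Ant System for a small symmetric travelling salesman instance might look like the sketch below. It is not Dorigo's reference implementation: the parameter defaults, the uniform initial pheromone of 1.0, the fixed start city 0, the symmetric deposit, and the function name `ant_system` are all assumptions chosen for brevity. It requires Python 3.8 or later for `math.dist` and assumes all cities are at distinct coordinates.

```python
import math
import random


def ant_system(coords, n_ants=10, n_iters=20, alpha=1.0, beta=2.0,
               rho=0.5, Q=1.0, seed=0):
    """Return (best_tour, best_length) found by a basic Ant System."""
    rng = random.Random(seed)
    n = len(coords)
    dist = [[math.dist(a, b) for b in coords] for a in coords]
    tau = [[1.0] * n for _ in range(n)]          # uniform initial pheromone
    best_tour, best_len = None, math.inf

    for _iteration in range(n_iters):
        tours = []
        for _ant in range(n_ants):
            tour, unvisited = [0], set(range(1, n))
            while unvisited:                      # stochastic solution construction
                x = tour[-1]
                cand = sorted(unvisited)
                weights = [tau[x][y] ** alpha * (1.0 / dist[x][y]) ** beta
                           for y in cand]
                y = rng.choices(cand, weights=weights, k=1)[0]
                tour.append(y)
                unvisited.remove(y)
            length = sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))
            tours.append((tour, length))
            if length < best_len:                 # compare the ants' tours
                best_tour, best_len = tour, length

        for x in range(n):                        # evaporation on every edge
            for y in range(n):
                tau[x][y] *= 1.0 - rho
        for tour, length in tours:                # deposit Q / L_k per ant
            for i in range(n):
                x, y = tour[i], tour[(i + 1) % n]
                tau[x][y] += Q / length
                tau[y][x] += Q / length           # symmetric instance (assumption)
    return best_tour, best_len


square = [(0, 0), (0, 1), (1, 1), (1, 0)]
tour, length = ant_system(square)
print(tour, length)
```

On the unit square the shortest closed tour follows the perimeter and has length 4, which this sketch finds within the first few ants; larger instances would need more ants, more iterations, and parameter tuning.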

Ant Colony System (ACS)

In the Ant Colony System algorithm, the original Ant System was modified in three aspects: (i) the edge selection is biased towards exploitation (i.e. favoring the probability of selecting the shortest edges with a large amount of pheromone); (ii) while building a solution, ants change the pheromone level of the edges they are selecting by applying a local pheromone updating rule; (iii) at the end of each iteration, only the best ant is allowed to update the trails by applying a modified global pheromone updating rule.[26]
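
Modifications (i) and (ii) can be sketched as follows. The text above does not give formulas, so this sketch relies on a commonly described formulation and should be read as an assumption: with probability `q0` the ant greedily takes the move with the highest product of pheromone and attractiveness, otherwise it samples proportionally, and the local rule pulls the chosen edge toward an initial level `tau0` with rate `phi`. The names `q0`, `phi`, `tau0` and both function names are illustrative.

```python
import random


def acs_choose_next(x, allowed, tau, eta, beta=2.0, q0=0.9, rng=random):
    """Edge selection biased towards exploitation."""
    scores = {y: tau[x][y] * eta[x][y] ** beta for y in allowed}
    if rng.random() < q0:
        return max(scores, key=scores.get)        # exploit the best-looking move
    states = list(scores)                         # otherwise explore proportionally
    return rng.choices(states, weights=[scores[y] for y in states], k=1)[0]


def acs_local_update(tau, x, y, phi=0.1, tau0=1.0):
    """Local pheromone update applied as soon as an ant selects edge (x, y)."""
    tau[x][y] = (1.0 - phi) * tau[x][y] + phi * tau0


tau = {0: {1: 1.0, 2: 2.0}}
eta = {0: {1: 1.0, 2: 1.0}}
chosen = acs_choose_next(0, [1, 2], tau, eta, q0=1.0)   # q0 = 1.0: always greedy
acs_local_update(tau, 0, chosen)
print(chosen, tau[0][chosen])
```

Because the local update moves a freshly used edge back toward `tau0`, later ants in the same iteration are nudged toward other edges, which counterbalances the greedy selection.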

Elitist Ant System

In this algorithm, the global best solution deposits pheromone on its trail after every iteration (even if this trail has not been revisited), along with all the other ants.
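
A minimal sketch of the extra elitist deposit is shown below. It assumes the ordinary Ant System update has already been applied for the iteration, that pheromone is stored as `tau[x][y]` over directed arcs, and that the best-so-far tour is reinforced with a weight `e`; the weight and the function name are hypothetical, since the text does not specify how large the elitist deposit is.

```python
def elitist_deposit(tau, best_tour, best_length, e=2.0, Q=1.0):
    """Reinforce the arcs of the best-so-far tour, even if no ant reused it."""
    for x, y in zip(best_tour, best_tour[1:] + best_tour[:1]):
        tau[x][y] += e * Q / best_length
    return tau


tau = {0: {1: 0.5, 2: 0.5}, 1: {0: 0.5, 2: 0.5}, 2: {0: 0.5, 1: 0.5}}
elitist_deposit(tau, best_tour=[0, 1, 2], best_length=4.0, e=2.0, Q=1.0)
print(tau[0][1], tau[0][2])
```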

Max-Min Ant System (MMAS)

This algorithm controls the maximum and minimum pheromone amounts on each trail. Only the global best tour or the iteration best tour is allowed to add pheromone to its trail. To avoid stagnation of the search algorithm, the range of possible pheromone amounts on each trail is limited to an interval [τmin, τmax]. All edges are initialized to τmax to force a higher exploration of solutions. The trails are reinitialized to τmax when nearing stagnation.[27]
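
The pheromone bounds can be sketched as a clamping step applied after each update, together with a reset to the upper bound. The nested-dictionary layout `tau[x][y]` and the function names are assumptions, and how to detect that the search is nearing stagnation is left out because the text does not specify it.

```python
def clamp_pheromone(tau, tau_min, tau_max):
    """Force every trail level into the interval [tau_min, tau_max]."""
    for x in tau:
        for y in tau[x]:
            tau[x][y] = min(tau_max, max(tau_min, tau[x][y]))
    return tau


def reinitialize_pheromone(tau, tau_max):
    """Reset all trails to tau_max, as done at initialization or near stagnation."""
    for x in tau:
        for y in tau[x]:
            tau[x][y] = tau_max
    return tau


tau = {0: {1: 0.001, 2: 50.0, 3: 1.0}}
clamp_pheromone(tau, tau_min=0.1, tau_max=10.0)
print(tau)
```

Keeping every trail at or above the lower bound means no edge ever has zero selection probability, which is how the bounds counteract stagnation.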

Rank-based Ant System (ASrank)

All solutions are ranked according to their length. Only a fixed number of the best ants in this iteration are allowed to update their trails. The amount of pheromone deposited is weighted for each solution, such that solutions with shorter paths deposit more pheromone than the solutions with longer paths.
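
One possible weighting scheme is sketched below: the `w` shortest tours of the iteration deposit pheromone, and the tour at rank `r` (0 for the shortest) is weighted by `w - r`. The linear weights, the parameter `w`, and the function name are assumptions for illustration; the text only requires that shorter tours deposit more.

```python
def rank_based_deposit(tau, tours, lengths, w=3, Q=1.0):
    """Let only the w best ants deposit, with weights decreasing by rank."""
    ranked = sorted(zip(lengths, tours), key=lambda pair: pair[0])[:w]
    for rank, (length, tour) in enumerate(ranked):
        weight = w - rank                          # best ant gets the largest weight
        for x, y in zip(tour, tour[1:] + tour[:1]):
            tau[x][y] += weight * Q / length
    return tau


tau = {0: {1: 0.0, 2: 0.0}, 1: {0: 0.0, 2: 0.0}, 2: {0: 0.0, 1: 0.0}}
rank_based_deposit(tau, tours=[[0, 2, 1], [0, 1, 2]], lengths=[6.0, 3.0], w=2)
print(tau[0][1], tau[0][2])
```

Here the shorter tour (length 3) ranks first and deposits 2/3 on each of its arcs, while the longer one deposits 1/6.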

Continuous Orthogonal Ant Colony (COAC)

The pheromone deposit mechanism of COAC is to enable ants to search for solutions collaboratively and effectively. By using an orthogonal design method, ants in the feasible domain can explore their chosen regions rapidly and efficiently, with enhanced global search capability and accuracy. The orthogonal design method and the adaptive radius adjustment method can also be extended to other optimization algorithms for delivering wider advantages in solving practical problems.[28]


Recursive Ant Colony Optimization

This is a recursive form of ant system that divides the whole search domain into several subdomains and solves the objective on each of them.[29] The results from all the subdomains are compared and the best few are promoted to the next level. The subdomains corresponding to the selected results are further subdivided, and the process is repeated until an output of the desired precision is obtained. This method has been tested on ill-posed geophysical inversion problems and is reported to work well.[30]


Convergence

For some versions of the algorithm, it is possible to prove that it is convergent (i.e., it is able to find the global optimum in finite time). The first evidence of convergence for an ant colony algorithm was given in 2000 for the graph-based ant system algorithm, and later for the ACS and MMAS algorithms. As with most metaheuristics, it is very difficult to estimate the theoretical speed of convergence. A performance analysis of a continuous ant colony algorithm with respect to its parameters (edge selection strategy, distance metric, and pheromone evaporation rate) showed that its performance and rate of convergence are sensitive to the chosen parameter values, and especially to the value of the pheromone evaporation rate.[31] In 2004, Zlochin and his colleagues[32] showed that ACO-type algorithms are closely related to stochastic gradient descent, the cross-entropy method and estimation of distribution algorithms. They proposed "model-based search" as a common framework for these metaheuristics.


Applications

[Knapsack problem: the ants prefer the smaller drop of honey over the more abundant, but less nutritious, sugar]

Ant colony optimization algorithms have been applied to many combinatorial optimization problems, ranging from quadratic assignment to protein folding and vehicle routing, and many derived methods have been adapted to dynamic problems in real variables, stochastic problems, multi-objective problems and parallel implementations.


They have also been used to produce near-optimal solutions to the travelling salesman problem. They have an advantage over simulated annealing and genetic algorithm approaches to similar problems when the graph may change dynamically: the ant colony algorithm can be run continuously and adapt to changes in real time. This is of interest in network routing and urban transportation systems.


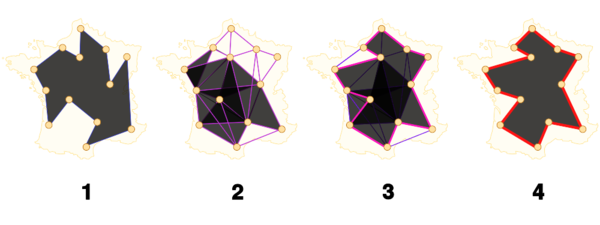

The first ACO algorithm was called the ant system[25] and was designed to solve the travelling salesman problem, in which the goal is to find the shortest round trip linking a series of cities. The general algorithm is relatively simple and is based on a set of ants, each making one of the possible round trips through the cities. At each stage, the ant chooses to move from one city to another according to the following rules:


- It must visit each city exactly once;

- A distant city has less chance of being chosen (the visibility);

- The more intense the pheromone trail laid out on an edge between two cities, the greater the probability that that edge will be chosen;

- Having completed its journey, the ant deposits pheromone on all edges it traversed, and deposits more if the journey is short;

- After each iteration, trails of pheromones evaporate.
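The rules above can be sketched as a small Python program. This is a minimal illustration under stated assumptions, not the original ant system implementation: the parameter names `alpha`, `beta`, `rho` and `q`, their default values, the initial pheromone level of 1.0, and the choice of visibility as `1 / distance` are all assumed for the example.

```python
import math
import random


def tour_length(tour, dist):
    """Length of the closed round trip that visits the cities in order."""
    return sum(dist[tour[k]][tour[(k + 1) % len(tour)]] for k in range(len(tour)))


def build_tour(dist, tau, alpha, beta, rng):
    """One ant builds a tour, visiting every city exactly once."""
    n = len(dist)
    current = rng.randrange(n)
    tour, unvisited = [current], set(range(n)) - {current}
    while unvisited:
        candidates = sorted(unvisited)
        # Attractiveness = pheromone^alpha * visibility^beta; distant cities
        # have low visibility and are therefore less likely to be chosen.
        weights = [(tau[current][j] ** alpha) * ((1.0 / dist[current][j]) ** beta)
                   for j in candidates]
        current = rng.choices(candidates, weights=weights, k=1)[0]
        tour.append(current)
        unvisited.remove(current)
    return tour


def ant_system(dist, n_ants=10, n_iter=50, alpha=1.0, beta=2.0, rho=0.5, q=1.0, seed=0):
    rng = random.Random(seed)
    n = len(dist)
    tau = [[1.0] * n for _ in range(n)]
    best_tour, best_len = None, math.inf
    for _ in range(n_iter):
        tours = [build_tour(dist, tau, alpha, beta, rng) for _ in range(n_ants)]
        # After each iteration, every trail evaporates.
        for i in range(n):
            for j in range(n):
                tau[i][j] *= 1.0 - rho
        # Each ant deposits q / length on its edges: shorter tours deposit more.
        for tour in tours:
            length = tour_length(tour, dist)
            if length < best_len:
                best_tour, best_len = tour, length
            for k in range(n):
                a, b = tour[k], tour[(k + 1) % n]
                tau[a][b] += q / length
                tau[b][a] += q / length
    return best_tour, best_len
```

A call such as `ant_system(dist)` with a symmetric distance matrix `dist` (positive distances between distinct cities) returns the best tour found and its length.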


Scheduling problem

- Job-shop scheduling problem (JSP)[34]


- Open-shop scheduling problem (OSP)[35][36]

- Permutation flow shop problem (PFSP)[37]

- Single machine total tardiness problem (SMTTP)[38]

- Resource-constrained project scheduling problem (RCPSP)[42]

- Group-shop scheduling problem (GSP)[43]

- Single-machine total tardiness problem with sequence dependent setup times (SMTTPDST)[44]

- Multistage flowshop scheduling problem (MFSP) with sequence dependent setup/changeover times[45]

Vehicle routing problem

- Multi-depot vehicle routing problem (MDVRP)[49]

- Period vehicle routing problem (PVRP)[50]

- Split delivery vehicle routing problem (SDVRP)[51]

- Stochastic vehicle routing problem (SVRP)[52]

- Time dependent vehicle routing problem with time windows (TDVRPTW)[59]

- Vehicle routing problem with time windows and multiple service workers (VRPTWMS)

Assignment problem

Set problem

- Partition problem (SPP)[67]

- Weight constrained graph tree partition problem (WCGTPP)[68]

- Arc-weighted l-cardinality tree problem (AWlCTP)[69]

- Multiple knapsack problem (MKP)[70]

- Maximum independent set problem (MIS)[71]

Device sizing problem in nanoelectronics physical design

- ACO-based optimization of a 45 nm CMOS-based sense amplifier circuit could converge to optimal solutions in very little time.[72]

- ACO-based reversible circuit synthesis could improve efficiency significantly.[73]

Antennas optimization and synthesis

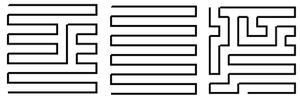

Loopback vibrators 10×10, synthesized by means of ACO algorithm


Unloopback vibrators 10×10, synthesized by means of ACO algorithm


Ant colony algorithms can be used to optimize the form of antennas. Examples include RFID-tag antennas designed with ant colony algorithms (ACO)[75] and 10×10 loopback and unloopback vibrators.[74]


Image processing

The ACO algorithm is used in image processing for image edge detection and edge linking.[76][77]


- Edge detection:

The graph here is the 2-D image, and the ants traverse it from one pixel to the next, depositing pheromone. The movement of ants from one pixel to another is directed by the local variation of the image's intensity values. This movement causes the highest density of pheromone to be deposited at the edges.


The following are the steps involved in edge detection using ACO:[78][79][80]


Step 1 Initialization:

Randomly place [math]\displaystyle{ K }[/math] ants on the image [math]\displaystyle{ I_{M_1 M_2} }[/math], where [math]\displaystyle{ K= (M_1*M_2)^\tfrac{1}{2} }[/math]. The pheromone matrix [math]\displaystyle{ \tau_{(i,j)} }[/math] is initialized with a random value. The major challenge in the initialization process is determining the heuristic matrix.

There are various methods to determine the heuristic matrix. For the example below, the heuristic matrix was calculated from the local statistics at the pixel position (i,j):

[math]\displaystyle{ \eta_{(i,j)}= \tfrac{1}{Z} Vc(I_{i,j}) }[/math]

where [math]\displaystyle{ I }[/math] is the image of size [math]\displaystyle{ M_1*M_2 }[/math] and

[math]\displaystyle{ Z =\sum_{i=1}^{M_1} \sum_{j=1}^{M_2} Vc(I_{i,j}) }[/math] is a normalization factor.

[math]\displaystyle{ \begin{align}Vc(I_{i,j}) = &f \left( \left\vert I_{(i-2,j-1)} - I_{(i+2,j+1)} \right\vert + \left\vert I_{(i-2,j+1)} - I_{(i+2,j-1)} \right\vert \right. \\ & +\left\vert I_{(i-1,j-2)} - I_{(i+1,j+2)} \right\vert + \left\vert I_{(i-1,j-1)} - I_{(i+1,j+1)} \right\vert\\ & +\left\vert I_{(i-1,j)} - I_{(i+1,j)} \right\vert + \left\vert I_{(i-1,j+1)} - I_{(i-1,j-1)} \right\vert\\ & + \left. \left\vert I_{(i-1,j+2)} - I_{(i-1,j-2)} \right\vert + \left\vert I_{(i,j-1)} - I_{(i,j+1)} \right\vert \right) \end{align} }[/math]
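As an illustration of how the heuristic matrix could be computed, the Python sketch below evaluates the eight absolute pixel differences of the Vc expression at every pixel and then divides by the normalization factor Z. It reads η as Vc divided by Z, which is what the role of Z as a normalization factor suggests. The handling of pixels near the image border (coordinates are clamped to the nearest valid pixel), the fallback for a completely flat image, and the default of an identity shaping function `f` are assumptions made for this example.

```python
def clique_variation(img, i, j, f=lambda x: x):
    """Vc at pixel (i, j): f of the summed absolute differences of 8 pixel pairs."""
    rows, cols = len(img), len(img[0])

    def px(r, c):
        # Assumption: clamp out-of-range coordinates to the nearest border pixel.
        r = min(max(r, 0), rows - 1)
        c = min(max(c, 0), cols - 1)
        return img[r][c]

    # Offsets (di, dj) of the pixel pairs, in the order they appear in Vc.
    pairs = [
        ((-2, -1), (2, 1)), ((-2, 1), (2, -1)),
        ((-1, -2), (1, 2)), ((-1, -1), (1, 1)),
        ((-1, 0), (1, 0)), ((-1, 1), (-1, -1)),
        ((-1, 2), (-1, -2)), ((0, -1), (0, 1)),
    ]
    total = sum(abs(px(i + a, j + b) - px(i + c, j + d)) for (a, b), (c, d) in pairs)
    return f(total)


def heuristic_matrix(img, f=lambda x: x):
    """eta[i][j] = Vc(I[i][j]) / Z, where Z is the sum of Vc over the image."""
    vc = [[clique_variation(img, i, j, f) for j in range(len(img[0]))]
          for i in range(len(img))]
    z = sum(sum(row) for row in vc)
    if z == 0:
        # Assumption: a flat image has no intensity variation, so eta is all zeros.
        return [[0.0] * len(img[0]) for _ in img]
    return [[v / z for v in row] for row in vc]
```

With this normalization the entries of `eta` sum to 1 for any image that is not flat, and pixels close to an intensity step receive larger values than pixels in uniform regions.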

[math]\displaystyle{ f(\cdot) }[/math] can be calculated using any of the following functions:

[math]\displaystyle{ f(x) = \lambda x, \quad \text{for } x \ge 0; \quad (1) }[/math]

[math]\displaystyle{ f(x) = \lambda x^2, \quad \text{for } x \ge 0; \quad (2) }[/math]

[math]\displaystyle{ f(x) = \begin{cases} \sin\left(\frac{\pi x}{2 \lambda}\right), & \text{for } 0 \le x \le \lambda; \quad (3) \\ 0, & \text{else} \end{cases} }[/math]

[math]\displaystyle{ f(x) = \begin{cases} \pi x \sin\left(\frac{\pi x}{2 \lambda}\right), & \text{for } 0 \le x \le \lambda; \quad (4) \\ 0, & \text{else} \end{cases} }[/math]

The parameter [math]\displaystyle{ \lambda }[/math] in each of the above functions adjusts the function's shape.
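The four shaping functions (1) to (4) translate directly into Python. The function names below are hypothetical labels chosen for this sketch; `lam` stands for the parameter λ.

```python
import math


def f_linear(x, lam):
    """Function (1): lambda * x, for x >= 0."""
    return lam * x


def f_quadratic(x, lam):
    """Function (2): lambda * x^2, for x >= 0."""
    return lam * x * x


def f_sine(x, lam):
    """Function (3): sin(pi * x / (2 * lambda)) on [0, lambda], else 0."""
    return math.sin(math.pi * x / (2 * lam)) if 0 <= x <= lam else 0.0


def f_scaled_sine(x, lam):
    """Function (4): pi * x * sin(pi * x / (2 * lambda)) on [0, lambda], else 0."""
    return math.pi * x * math.sin(math.pi * x / (2 * lam)) if 0 <= x <= lam else 0.0
```

Functions (1) and (2) grow without bound as the local variation increases, whereas (3) and (4) are cut off to zero beyond `lam`, so the choice of function and of `lam` changes how strongly large intensity differences attract the ants.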

Step 2 Construction process:

The ant's movement is based on 4-connected or 8-connected pixels. The probability with which the ant moves to a neighbouring pixel is given by the transition probability [math]\displaystyle{ P_{x,y} }[/math].
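The text does not spell out the probability equation, so the Python sketch below assumes the transition rule commonly used in ACO, in which the probability of moving to a neighbouring pixel is proportional to its pheromone value raised to `alpha` times its heuristic value raised to `beta`. The exponents, the function names, and the uniform fallback when all weights are zero are assumptions of this example.

```python
def neighbours(x, y, rows, cols, connectivity=8):
    """In-bounds 4-connected or 8-connected neighbours of pixel (x, y)."""
    steps = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    if connectivity == 8:
        steps += [(-1, -1), (-1, 1), (1, -1), (1, 1)]
    return [(x + dx, y + dy) for dx, dy in steps
            if 0 <= x + dx < rows and 0 <= y + dy < cols]


def transition_probabilities(x, y, tau, eta, alpha=1.0, beta=1.0, connectivity=8):
    """Map each neighbour of (x, y) to its assumed move probability."""
    cells = neighbours(x, y, len(tau), len(tau[0]), connectivity)
    weights = [(tau[r][c] ** alpha) * (eta[r][c] ** beta) for r, c in cells]
    total = sum(weights)
    if total == 0:
        # No neighbour is attractive: fall back to a uniform choice.
        return {cell: 1.0 / len(cells) for cell in cells}
    return {cell: w / total for cell, w in zip(cells, weights)}
```

An ant at `(x, y)` would then sample its next pixel from this distribution, for instance with `random.choices` from the Python standard library.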

Step 3 and Step 5 Update process:

The pheromone matrix is updated twice. In step 3 the trail of the ant (given by [math]\displaystyle{ \tau_{(x,y)} }[/math]) is updated, whereas in step 5 the evaporation of the trail is applied, as given by the equation below.

[math]\displaystyle{ \tau_{new} \leftarrow (1-\psi)\tau_{old} + \psi \tau_{0} }[/math], where [math]\displaystyle{ \psi }[/math] is the pheromone decay coefficient, [math]\displaystyle{ 0\lt \psi \lt 1 }[/math].
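The decay equation of step 5 can be applied to the whole pheromone matrix as in the short Python sketch below. The function name is hypothetical, and `tau0` is assumed here to be the initial pheromone value, which the text does not define explicitly.

```python
def apply_decay(tau, psi, tau0):
    """Return a new matrix with tau_new = (1 - psi) * tau_old + psi * tau0."""
    if not 0.0 < psi < 1.0:
        raise ValueError("psi must lie strictly between 0 and 1")
    return [[(1.0 - psi) * t + psi * tau0 for t in row] for row in tau]
```

For example, with `psi = 0.5` and `tau0 = 0.2`, a pixel with pheromone 1.0 drops to 0.6 and a pixel with pheromone 0.0 rises to 0.1. Repeated application pulls every entry towards `tau0`, so trails that are no longer reinforced gradually fade back to that baseline.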

Step 7 Decision process:

Once the K ants have moved a fixed distance L for N iterations, the decision whether a pixel is an edge or not is based on the threshold T applied to the pheromone matrix τ. The threshold for the example below is calculated using Otsu's method.
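A minimal Python sketch of this decision step is given below. It computes an Otsu threshold over the pheromone values by maximizing the between-class variance of a histogram, then marks pixels whose pheromone exceeds the threshold as edges. The number of histogram bins (256), the function names, and the convention that values strictly above the threshold count as edges are assumptions of this example.

```python
def otsu_threshold(values, bins=256):
    """Threshold that maximizes the between-class variance of a histogram."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo  # all values identical: nothing to separate
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    total = len(values)
    sum_all = sum(k * h for k, h in enumerate(hist))
    best_k, best_var = 0, -1.0
    w0, sum0 = 0, 0.0
    for k in range(bins):
        w0 += hist[k]            # size of the low class (bins 0..k)
        sum0 += k * hist[k]
        w1 = total - w0          # size of the high class
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_k = var_between, k
    # Upper edge of the last bin assigned to the low class.
    return lo + (best_k + 1) * width


def edge_map(tau):
    """1 where the pheromone exceeds the Otsu threshold, else 0."""
    t = otsu_threshold([v for row in tau for v in row])
    return [[1 if v > t else 0 for v in row] for row in tau]
```

Applied to a final pheromone matrix, `edge_map` yields a binary image in which the pixels that accumulated the most pheromone are reported as edges.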

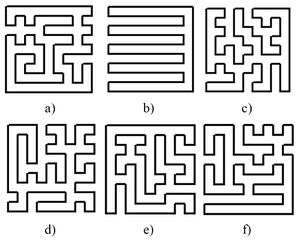

Image edge detected using ACO:

The images below are generated using the different functions given by equations (1) to (4).[81]


- Edge linking:[82] ACO has also proven effective in edge linking algorithms.

Other applications

- Connection-oriented network routing[85]

- Discounted cash flows in project scheduling[91]

- Energy and electricity network design[94]


- Grid workflow scheduling problem[95]

- Inhibitory peptide design for protein-protein interactions[96]

- Intelligent testing system[97]

Definition difficulty


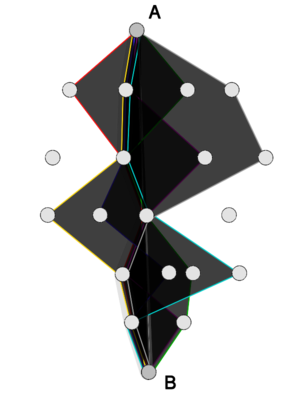

With an ACO algorithm, the shortest path in a graph between two points A and B is built from a combination of several paths.[104] It is not easy to give a precise definition of which algorithms are or are not ant colony algorithms, because the definition may vary between authors and uses. Broadly speaking, ant colony algorithms are regarded as population-based metaheuristics in which each solution is represented by an ant moving in the search space.[105] Ants mark the best solutions and take account of previous markings to optimize their search. They can be seen as probabilistic multi-agent algorithms that use a probability distribution to make the transition between iterations.[106] In their versions for combinatorial problems, they use an iterative construction of solutions.[107] According to some authors, what distinguishes ACO algorithms from their relatives (such as estimation of distribution algorithms or particle swarm optimization) is precisely their constructive aspect. In combinatorial problems, it is possible that the best solution is eventually found even though no single ant proves effective. Thus, in the example of the travelling salesman problem, it is not necessary that an ant actually travels the shortest route: the shortest route can be built from the strongest segments of the best solutions. However, this definition can be problematic for problems in real variables, where no structure of 'neighbours' exists. The collective behaviour of social insects remains a source of inspiration for researchers. The wide variety of algorithms (for optimization or not) seeking self-organization in biological systems has led to the concept of "swarm intelligence",[10] a very general framework into which ant colony algorithms fit.


Stigmergy algorithms

In practice, a large number of algorithms claim to be "ant colonies" without always sharing the general framework of optimization by canonical ant colonies.[108] The use of an exchange of information between ants via the environment (a principle called "stigmergy") is generally deemed enough for an algorithm to belong to the class of ant colony algorithms. This principle has led some authors to create the term "value" to organize methods and behavior based on the search for food, the sorting of larvae, division of labour and cooperative transportation.[109]


Related methods

- Genetic algorithms (GA) maintain a pool of solutions rather than just one. The process of finding superior solutions mimics that of evolution, with solutions being combined or mutated to alter the pool of solutions, with solutions of inferior quality being discarded.

- An estimation of distribution algorithm (EDA) is an evolutionary algorithm that substitutes traditional reproduction operators by model-guided operators. Such models are learned from the population by employing machine learning techniques and represented as probabilistic graphical models, from which new solutions can be sampled[110][111] or generated from guided-crossover.[112][113]

- Simulated annealing (SA) is a related global optimization technique which traverses the search space by generating neighboring solutions of the current solution. A superior neighbor is always accepted. An inferior neighbor is accepted probabilistically based on the difference in quality and a temperature parameter. The temperature parameter is modified as the algorithm progresses to alter the nature of the search.

- Reactive search optimization focuses on combining machine learning with optimization, by adding an internal feedback loop to self-tune the free parameters of an algorithm to the characteristics of the problem, of the instance, and of the local situation around the current solution.

- Tabu search (TS) is similar to simulated annealing in that both traverse the solution space by testing mutations of an individual solution. While simulated annealing generates only one mutated solution, tabu search generates many mutated solutions and moves to the solution with the lowest fitness of those generated. To prevent cycling and encourage greater movement through the solution space, a tabu list is maintained of partial or complete solutions. It is forbidden to move to a solution that contains elements of the tabu list, which is updated as the solution traverses the solution space.

- Artificial immune system (AIS) algorithms are modeled on vertebrate immune systems.

- Particle swarm optimization (PSO), a swarm intelligence method

- Intelligent water drops (IWD), a swarm-based optimization algorithm based on natural water drops flowing in rivers

- Gravitational search algorithm (GSA), a swarm intelligence method

- Ant colony clustering method (ACCM), a method that makes use of a clustering approach, extending ACO.

- Stochastic diffusion search (SDS), an agent-based probabilistic global search and optimization technique best suited to problems where the objective function can be decomposed into multiple independent partial-functions

History

The inventors are Frans Moyson and Bernard Manderick. Pioneers of the field include Marco Dorigo and Luca Maria Gambardella.[114]


Chronology of ant colony optimization algorithms.


- 1959, Pierre-Paul Grassé invented the theory of stigmergy to explain the behavior of nest building in termites;[115]

- 1983, Deneubourg and his colleagues studied the collective behavior of ants;[116]

- 1988, Moyson and Manderick published an article on self-organization among ants;[117]

- 1989, the work of Goss, Aron, Deneubourg and Pasteels on the collective behavior of Argentine ants gave rise to the idea of ant colony optimization algorithms;[118]

- 1989, Ebling and his colleagues implemented a model of foraging behavior;[119]

- 1991, M. Dorigo proposed the ant system in his doctoral thesis (which was published in 1992[6]). A technical report extracted from the thesis and co-authored by V. Maniezzo and A. Colorni[120] was published five years later;[25]

- 1994, Appleby and Steward of British Telecommunications Plc published the first application to telecommunications networks[121]

- 1995, Gambardella and Dorigo proposed Ant-Q,[122] the preliminary version of ant colony system and the first extension of ant system;[25]

- 1996, Gambardella and Dorigo proposed ant colony system [123]

- 1996, publication of the article on ant system;[25]

- 1996, Hoos and Stützle invent the max-min ant system;[27]

- 1997, Dorigo and Gambardella proposed ant colony system hybridized with local search;[26]

- 1997, Schoonderwoerd and his colleagues published an improved application to telecommunication networks;[124]

- 1998, Dorigo launches first conference dedicated to the ACO algorithms;[125]

- 1998, Stützle proposes initial parallel implementations;[126]

- 1999, Gambardella, Taillard and Agazzi proposed MACS-VRPTW, the first multiple ant colony system applied to vehicle routing problems with time windows;[127]

- 1999, Bonabeau, Dorigo and Theraulaz publish a book dealing mainly with artificial ants[128]

- 2000, special issue of the Future Generation Computer Systems journal on ant algorithms[129]

- 2000, first applications to scheduling, sequencing and constraint satisfaction;

- 2000, Gutjahr provides the first evidence of convergence for an algorithm of ant colonies[130]

- 2001, Iredi and his colleagues published the first multi-objective algorithm[131]

- 2002, first applications to schedule design and Bayesian networks;

- 2002, Bianchi and her colleagues suggested the first algorithm for stochastic problems;[132]

- 2004, Dorigo and Stützle publish the Ant Colony Optimization book with MIT Press [133]

- 2004, Zlochin and Dorigo show that some algorithms are equivalent to the stochastic gradient descent, the cross-entropy method and algorithms to estimate distribution[32]

- 2005, first applications to protein folding problems.

- 2012, Prabhakar and colleagues publish research relating to the operation of individual ants communicating in tandem without pheromones, mirroring the principles of computer network organization. The communication model has been compared to the Transmission Control Protocol.[134]

- 2016, first application to peptide sequence design.[96]

- 2017, successful integration of the multi-criteria decision-making method PROMETHEE into the ACO algorithm (HUMANT algorithm).[135]

References

- ↑ Waldner, Jean-Baptiste (2008). Nanocomputers and Swarm Intelligence. London: ISTE John Wiley & Sons. p. 225. ISBN 978-1-84704-002-2.

- ↑ Monmarché Nicolas, Guinand Frédéric and Siarry Patrick (2010). Artificial Ants. Wiley-ISTE. ISBN 978-1-84821-194-0.

- ↑ Dorigo, M.; Gambardella, L.M. (1997). "Learning Approach to the Traveling Salesman Problem". IEEE Transactions on Evolutionary Computation, 1 (1): 214.

- ↑ Ant Colony Optimization by Marco Dorigo and Thomas Stützle, MIT Press, 2004.

- ↑ A. Colorni, M. Dorigo and V. Maniezzo, Distributed Optimization by Ant Colonies, Proceedings of the First European Conference on Artificial Life, Paris, France, Elsevier Publishing, 134-142, 1991.

- ↑ 6.0 6.1 M. Dorigo, Optimization, Learning and Natural Algorithms, PhD thesis, Politecnico di Milano, Italy, 1992.

- ↑ Zlochin, Mark; Birattari, Mauro; Meuleau, Nicolas; Dorigo, Marco (1 October 2004). "Model-Based Search for Combinatorial Optimization: A Critical Survey". Annals of Operations Research (in English). 131 (1–4): 373–395. CiteSeerX 10.1.1.3.427. doi:10.1023/B:ANOR.0000039526.52305.af. ISSN 0254-5330.

- ↑ Fladerer, Johannes-Paul; Kurzmann, Ernst (November 2019). WISDOM OF THE MANY: how to create self-organisation and how to use collective... intelligence in companies and in society from mana.. BOOKS ON DEMAND. ISBN 9783750422421.

- ↑ Marco Dorigo and Thomas Stützle, Ant Colony Optimization, p.12. 2004.

- ↑ 10.0 10.1 Waldner, Jean-Baptiste (2008). Nanocomputers and Swarm Intelligence. London: ISTE John Wiley & Sons. p. 214. ISBN 978-1-84704-002-2.

- ↑ Waldner, Jean-Baptiste (2007). Inventer l'Ordinateur du XXIème Siècle. London: Hermes Science. pp. 259–265. ISBN 978-2-7462-1516-0.

- ↑ Waldner, Jean-Baptiste (2008). Nanocomputers and Swarm Intelligence. London: ISTE John Wiley & Sons. p. 215. ISBN 978-1-84704-002-2.

- ↑ Lima, Danielli A., and Gina MB Oliveira. "A cellular automata ant memory model of foraging in a swarm of robots." Applied Mathematical Modelling 47, 2017: 551-572.

- ↑ Russell, R. Andrew. "Ant trails-an example for robots to follow?." Robotics and Automation, 1999. Proceedings. 1999 IEEE International Conference on. Vol. 4. IEEE, 1999.

- ↑ Fujisawa, Ryusuke, et al. "Designing pheromone communication in swarm robotics: Group foraging behavior mediated by chemical substance." Swarm Intelligence 8.3 (2014): 227-246.

- ↑ Sakakibara, Toshiki, and Daisuke Kurabayashi. "Artificial pheromone system using rfid for navigation of autonomous robots." Journal of Bionic Engineering 4.4 (2007): 245-253.

- ↑ Arvin, Farshad, et al. "Investigation of cue-based aggregation in static and dynamic environments with a mobile robot swarm." Adaptive Behavior (2016): 1-17.

- ↑ Farshad Arvin, et al. "Imitation of honeybee aggregation with collective behavior of swarm robots." International Journal of Computational Intelligence Systems 4.4 (2011): 739-748.

- ↑ Schmickl, Thomas, et al. "Get in touch: cooperative decision making based on robot-to-robot collisions." Autonomous Agents and Multi-Agent Systems 18.1 (2009): 133-155.

- ↑ Garnier, Simon, et al. "Do ants need to estimate the geometrical properties of trail bifurcations to find an efficient route? A swarm robotics test bed." PLoS Comput Biol 9.3 (2013): e1002903.

- ↑ Arvin, Farshad, et al. "Cue-based aggregation with a mobile robot swarm: a novel fuzzy-based method." Adaptive Behavior 22.3 (2014): 189-206.

- ↑ Garnier, Simon, et al. "Alice in pheromone land: An experimental setup for the study of ant-like robots." 2007 IEEE Swarm Intelligence Symposium. IEEE, 2007.

- ↑ Farshad Arvin et al. "COSΦ: artificial pheromone system for robotic swarms research." IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2015.

- ↑ Krajník, Tomáš, et al. "A practical multirobot localization system." Journal of Intelligent & Robotic Systems 76.3-4 (2014): 539-562.

- ↑ 25.0 25.1 25.2 25.3 25.4 M. Dorigo, V. Maniezzo, and A. Colorni, Ant system: optimization by a colony of cooperating agents, IEEE Transactions on Systems, Man, and Cybernetics--Part B, volume 26, number 1, pages 29-41, 1996.

- ↑ 26.0 26.1 M. Dorigo and L.M. Gambardella, Ant Colony System: A Cooperative Learning Approach to the Traveling Salesman Problem, IEEE Transactions on Evolutionary Computation, volume 1, number 1, pages 53-66, 1997.

- ↑ 27.0 27.1 T. Stützle and H.H. Hoos, MAX MIN Ant System, Future Generation Computer Systems, volume 16, pages 889-914, 2000.

- ↑ X Hu, J Zhang, and Y Li (2008). Orthogonal methods based ant colony search for solving continuous optimization problems. Journal of Computer Science and Technology, 23(1), pp.2-18.

- ↑ Gupta, D.K.; Arora, Y.; Singh, U.K.; Gupta, J.P., "Recursive Ant Colony Optimization for estimation of parameters of a function," Recent Advances in Information Technology (RAIT), 2012 1st International Conference on , vol., no., pp.448-454, 15–17 March 2012

- ↑ Gupta, D.K.; Gupta, J.P.; Arora, Y.; Shankar, U., "Recursive ant colony optimization: a new technique for the estimation of function parameters from geophysical field data," Near Surface Geophysics , vol. 11, no. 3, pp.325-339

- ↑ V.K.Ojha, A. Abraham and V. Snasel, ACO for Continuous Function Optimization: A Performance Analysis, 14th International Conference on Intelligent Systems Design and Applications (ISDA), Japan, Page 145 - 150, 2017, 978-1-4799-7938-7/14 2014 IEEE.

- ↑ 32.0 32.1 M. Zlochin, M. Birattari, N. Meuleau, and M. Dorigo, Model-based search for combinatorial optimization: A critical survey, Annals of Operations Research, vol. 131, pp. 373-395, 2004.

- ↑ L.M. Gambardella, M. Dorigo, "An Ant Colony System Hybridized with a New Local Search for the Sequential Ordering Problem", INFORMS Journal on Computing, vol.12(3), pp. 237-255, 2000.

- ↑ D. Martens, M. De Backer, R. Haesen, J. Vanthienen, M. Snoeck, B. Baesens, Classification with Ant Colony Optimization, IEEE Transactions on Evolutionary Computation, volume 11, number 5, pages 651-665, 2007.

- ↑ B. Pfahringer, "Multi-agent search for open shop scheduling: adapting the Ant-Q formalism," Technical report TR-96-09, 1996.

- ↑ C. Blum, "Beam-ACO, Hybridizing ant colony optimization with beam search. An application to open shop scheduling," Technical report TR/IRIDIA/2003-17, 2003.

- ↑ T. Stützle, "An ant approach to the flow shop problem," Technical report AIDA-97-07, 1997.

- ↑ A. Bauer, B. Bullnheimer, R. F. Hartl and C. Strauss, "Minimizing total tardiness on a single machine using ant colony optimization," Central European Journal for Operations Research and Economics, vol.8, no.2, pp.125-141, 2000.

- ↑ M. den Besten, "Ants for the single machine total weighted tardiness problem," Master's thesis, University of Amsterdam, 2000.

- ↑ M. den Besten, T. Stützle and M. Dorigo, "Ant colony optimization for the total weighted tardiness problem," Proceedings of PPSN-VI, Sixth International Conference on Parallel Problem Solving from Nature, vol. 1917 of Lecture Notes in Computer Science, pp.611-620, 2000.

- ↑ D. Merkle and M. Middendorf, "An ant algorithm with a new pheromone evaluation rule for total tardiness problems," Real World Applications of Evolutionary Computing, vol. 1803 of Lecture Notes in Computer Science, pp.287-296, 2000.

- ↑ D. Merkle, M. Middendorf and H. Schmeck, "Ant colony optimization for resource-constrained project scheduling," Proceedings of the Genetic and Evolutionary Computation Conference (GECCO 2000), pp.893-900, 2000.

- ↑ C. Blum, "ACO applied to group shop scheduling: a case study on intensification and diversification," Proceedings of ANTS 2002, vol. 2463 of Lecture Notes in Computer Science, pp.14-27, 2002.

- ↑ C. Gagné, W. L. Price and M. Gravel, "Comparing an ACO algorithm with other heuristics for the single machine scheduling problem with sequence-dependent setup times," Journal of the Operational Research Society, vol.53, pp.895-906, 2002.

- ↑ A. V. Donati, V. Darley, B. Ramachandran, "An Ant-Bidding Algorithm for Multistage Flowshop Scheduling Problem: Optimization and Phase Transitions", book chapter in Advances in Metaheuristics for Hard Optimization, Springer, pp.111-138, 2008.

- ↑ Toth, Paolo; Vigo, Daniele (2002). "Models, relaxations and exact approaches for the capacitated vehicle routing problem". Discrete Applied Mathematics. 123 (1–3): 487–512. doi:10.1016/S0166-218X(01)00351-1.

- ↑ J. M. Belenguer, and E. Benavent, "A cutting plane algorithm for capacitated arc routing problem," Computers & Operations Research, vol.30, no.5, pp.705-728, 2003.

- ↑ T. K. Ralphs, "Parallel branch and cut for capacitated vehicle routing," Parallel Computing, vol.29, pp.607-629, 2003.

- ↑ Salhi, S.; Sari, M. (1997). "A multi-level composite heuristic for the multi-depot vehicle fleet mix problem". European Journal of Operational Research. 103: 95–112. doi:10.1016/S0377-2217(96)00253-6.

- ↑ Angelelli, Enrico; Speranza, Maria Grazia (2002). "The periodic vehicle routing problem with intermediate facilities". European Journal of Operational Research. 137 (2): 233–247. doi:10.1016/S0377-2217(01)00206-5.

- ↑ Ho, Sin C.; Haugland, Dag (2002). "A Tabu Search Heuristic for the Vehicle Routing Problem with Time Windows and Split Deliveries". Computers and Operations Research. 31 (12): 1947–1964. CiteSeerX 10.1.1.8.7096. doi:10.1016/S0305-0548(03)00155-2.

- ↑ Secomandi, Nicola. "Comparing neuro-dynamic programming algorithms for the vehicle routing problem with stochastic demands". Computers & Operations Research: 2000. CiteSeerX 10.1.1.392.4034.

- ↑ W. P. Nanry and J. W. Barnes, "Solving the pickup and delivery problem with time windows using reactive tabu search," Transportation Research Part B, vol.34, no. 2, pp.107-121, 2000.

- ↑ R. Bent and P.V. Hentenryck, "A two-stage hybrid algorithm for pickup and delivery vehicle routing problems with time windows," Computers & Operations Research, vol.33, no.4, pp.875-893, 2003.

- ↑ L.M. Gambardella, E. Taillard, G. Agazzi, "MACS-VRPTW: A Multiple Ant Colony System for Vehicle Routing Problems with Time Windows", In D. Corne, M. Dorigo and F. Glover, editors, New Ideas in Optimization, McGraw-Hill, London, UK, pp. 63-76, 1999.

- ↑ Bachem, A.; Hochstättler, W.; Malich, M. (1996). "The simulated trading heuristic for solving vehicle routing problems". Discrete Applied Mathematics. 65 (1–3): 47–72. doi:10.1016/0166-218X(95)00027-O.

- ↑ Hong, Sung-Chul; Park, Yang-Byung (1999). "A heuristic for bi-objective vehicle routing with time window constraints". International Journal of Production Economics. 62 (3): 249–258. doi:10.1016/S0925-5273(98)00250-3.

- ↑ Russell, Robert A.; Chiang, Wen-Chyuan (2006). "Scatter search for the vehicle routing problem with time windows". European Journal of Operational Research. 169 (2): 606–622. doi:10.1016/j.ejor.2004.08.018.

- ↑ A. V. Donati, R. Montemanni, N. Casagrande, A. E. Rizzoli, L. M. Gambardella, "Time Dependent Vehicle Routing Problem with a Multi Ant Colony System", European Journal of Operational Research, vol.185, no.3, pp.1174–1191, 2008.

- ↑ "MAX-MIN Ant System for Quadratic Assignment Problems". 1997. CiteSeerX 10.1.1.47.5167.

- ↑ R. Lourenço and D. Serra, "Adaptive search heuristics for the generalized assignment problem," Mathware & soft computing, vol.9, no.2-3, 2002.

- ↑ M. Yagiura, T. Ibaraki and F. Glover, "An ejection chain approach for the generalized assignment problem," INFORMS Journal on Computing, vol. 16, no. 2, pp. 133–151, 2004.

- ↑ K. I. Aardal, S. P. M. van Hoesel, A. M. C. A. Koster, C. Mannino and Antonio Sassano, "Models and solution techniques for the frequency assignment problem," A Quarterly Journal of Operations Research, vol.1, no.4, pp.261-317, 2001.

- ↑ Y. C. Liang and A. E. Smith, "An ant colony optimization algorithm for the redundancy allocation problem (RAP)," IEEE Transactions on Reliability, vol.53, no.3, pp.417-423, 2004.

- ↑ G. Leguizamon and Z. Michalewicz, "A new version of ant system for subset problems," Proceedings of the 1999 Congress on Evolutionary Computation(CEC 99), vol.2, pp.1458-1464, 1999.

- ↑ R. Hadji, M. Rahoual, E. Talbi and V. Bachelet, "Ant colonies for the set covering problem," Abstract proceedings of ANTS2000, pp.63-66, 2000.

- ↑ V Maniezzo and M Milandri, "An ant-based framework for very strongly constrained problems," Proceedings of ANTS2000, pp.222-227, 2002.

- ↑ R. Cordone and F. Maffioli, "Colored Ant System and local search to design local telecommunication networks," Applications of Evolutionary Computing: Proceedings of Evo Workshops, vol.2037, pp.60-69, 2001.

- ↑ C. Blum and M.J. Blesa, "Metaheuristics for the edge-weighted k-cardinality tree problem," Technical Report TR/IRIDIA/2003-02, IRIDIA, 2003.

- ↑ S. Fidanova, "ACO algorithm for MKP using various heuristic information", Numerical Methods and Applications, vol.2542, pp.438-444, 2003.

- ↑ G. Leguizamon, Z. Michalewicz and Martin Schutz, "An ant system for the maximum independent set problem," Proceedings of the 2001 Argentinian Congress on Computer Science, vol.2, pp.1027-1040, 2001.

- ↑ O. Okobiah, S. P. Mohanty, and E. Kougianos, "Ordinary Kriging Metamodel-Assisted Ant Colony Algorithm for Fast Analog Design Optimization", in Proceedings of the 13th IEEE International Symposium on Quality Electronic Design (ISQED), pp. 458-463, 2012.

- ↑ M. Sarkar, P. Ghosal, and S. P. Mohanty, "Reversible Circuit Synthesis Using ACO and SA based Quinne-McCluskey Method", in Proceedings of the 56th IEEE International Midwest Symposium on Circuits & Systems (MWSCAS), 2013, pp. 416-419.

- ↑ 74.0 74.1 74.2 Ermolaev S.Y., Slyusar V.I. Antenna synthesis based on the ant colony optimization algorithm. Proc. ICATT’2009, Lviv, Ukraine, 6-9 October, 2009. Pages 298-300.