“Affective computing”: difference between revisions

(Translation corrections)

(First translation of the full text, excluding headings and subheadings)

Line 216 / Line 216:

The complexity of the affect recognition process increases with the number of classes (affects) and speech descriptors used within the classifier. It is therefore crucial to select only the most relevant features, both to ensure that the model can identify emotions successfully and to improve performance, which is particularly significant for real-time detection. The range of possible choices is vast, with some studies mentioning the use of over 200 distinct features.[20] Identifying redundant and undesirable features is crucial for optimizing the system and increasing the rate of correct emotion detection. The most common speech characteristics are categorized into the following groups.[24][25]

# Frequency characteristics<ref>{{Cite book |doi=10.1109/ICCCI50826.2021.9402569|isbn=978-1-7281-5875-4|chapter=Non-linear frequency warping using constant-Q transformation for speech emotion recognition|title=2021 International Conference on Computer Communication and Informatics (ICCCI)|pages=1–4|year=2021|last1=Singh|first1=Premjeet|last2=Saha|first2=Goutam|last3=Sahidullah|first3=Md|arxiv=2102.04029}}</ref>
#* Accent shape – affected by the rate of change of the fundamental frequency.
#* Average pitch – describes how high or low the speaker speaks relative to normal speech.
#* Contour slope – describes the tendency of the frequency change over time; it can be rising, falling or level.
#* Final lowering – the amount by which the frequency falls at the end of an utterance.
#* Pitch range – measures the spread between the maximum and minimum frequency of an utterance.
# Time-related features:
#* Speech rate – describes the rate of words or syllables uttered over a unit of time.
#* Stress frequency – measures the rate of occurrence of pitch-accented utterances.
# Voice quality parameters and energy descriptors:
#* Breathiness – measures the aspiration noise in speech.
#* Brilliance – describes the dominance of high or low frequencies in the speech.
#* Loudness – measures the amplitude of the speech waveform, which translates to the energy of an utterance.
#* Pause discontinuity – describes the transitions between sound and silence.
#* Pitch discontinuity – describes the transitions of the fundamental frequency.
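Several of the frequency and time-related descriptors above reduce to simple arithmetic once a pitch tracker has produced a frame-level fundamental-frequency (F0) contour. The following is a minimal sketch, assuming such a contour is already available (0 marks unvoiced frames) and that a syllable count is known; the function names, the 10 ms frame step and the particular definition of final lowering are illustrative assumptions, not the definitions used in the cited studies.

```python
def speech_rate(n_syllables, duration_s):
    """Speech rate as syllables per second over one utterance."""
    return n_syllables / duration_s


def pitch_features(f0, frame_s=0.01, tail_frames=20):
    """Pitch descriptors from a frame-level F0 contour.

    f0 holds one fundamental-frequency estimate in Hz per analysis frame,
    frame_s seconds apart, with 0 marking unvoiced frames.
    """
    voiced = [(i * frame_s, f) for i, f in enumerate(f0) if f > 0]
    if len(voiced) < 2:
        raise ValueError("need at least two voiced frames")
    times = [t for t, _ in voiced]
    freqs = [f for _, f in voiced]
    mean_t = sum(times) / len(times)
    mean_f = sum(freqs) / len(freqs)
    # Contour slope: least-squares line of F0 against time, in Hz per second.
    slope = (sum((t - mean_t) * (f - mean_f) for t, f in voiced)
             / sum((t - mean_t) ** 2 for t in times))
    # Final lowering (hypothetical definition): fall across the last voiced frames.
    tail = freqs[-tail_frames:]
    return {
        "average_pitch": mean_f,
        "pitch_range": max(freqs) - min(freqs),
        "contour_slope": slope,
        "final_lowering": tail[0] - tail[-1],
    }


# Usage with a synthetic, steadily falling contour (hypothetical data).
contour = [0.0, 0.0] + [200.0 - i for i in range(50)] + [0.0]
print(pitch_features(contour))
print(speech_rate(12, 3.0))  # 4.0
```

A least-squares slope is used for the contour slope because it summarizes the whole voiced contour rather than depending on only its first and last frames.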

Line 269 / Line 271:

The detection and processing of facial expressions are achieved through various methods such as optical flow, hidden Markov models, neural network processing or active appearance models. Multiple modalities can be combined or fused (multimodal recognition, e.g. facial expressions and speech prosody,[27] facial expressions and hand gestures,[28] or facial expressions with speech and text for multimodal data and metadata analysis) to provide a more robust estimation of the subject's emotional state. Affectiva is a company (co-founded by Rosalind Picard and Rana El Kaliouby) directly related to affective computing that aims to investigate solutions and software for facial affect detection.
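Fusion can take place at the feature level or at the decision level. Below is a minimal sketch of decision-level (late) fusion, assuming each modality's classifier already outputs a probability for every emotion class: the per-modality probabilities are averaged with weights and the highest-scoring class is chosen. The class names, probabilities and weights are hypothetical.

```python
def late_fusion(modality_probs, weights=None):
    """Decision-level fusion: weighted average of per-modality class probabilities.

    modality_probs maps a modality name to a {class: probability} dict; all
    modalities are assumed to cover the same classes.
    """
    names = list(modality_probs)
    if weights is None:
        weights = {m: 1.0 for m in names}  # equal trust in every modality
    total_w = sum(weights[m] for m in names)
    classes = list(modality_probs[names[0]])
    fused = {
        c: sum(weights[m] * modality_probs[m][c] for m in names) / total_w
        for c in classes
    }
    best = max(fused, key=fused.get)
    return best, fused


# Hypothetical classifier outputs for one video segment.
probs = {
    "face": {"happy": 0.6, "neutral": 0.3, "angry": 0.1},
    "speech": {"happy": 0.2, "neutral": 0.7, "angry": 0.1},
}
print(late_fusion(probs)[0])                                        # neutral
print(late_fusion(probs, weights={"face": 3.0, "speech": 1.0})[0])  # happy
```

The example shows how the fused decision can change depending on how much each channel is trusted.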

==== Facial expression databases ====

Line 278 / Line 280:

Creating an emotion database is a difficult and time-consuming task. However, database creation is an essential step in building a system that recognizes human emotions. Most publicly available emotion databases include only posed facial expressions. In posed expression databases, participants are asked to display different basic emotional expressions, while in spontaneous expression databases the expressions are natural. Spontaneous emotion elicitation requires significant effort in selecting stimuli that lead to a rich display of the intended emotions. In addition, the process involves manual tagging of emotions by trained individuals, which makes the databases highly reliable. Since the perception of expressions and their intensity is subjective, annotation by experts is essential for validation.
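Because expert annotation is used for validation, it is common to check how consistently two annotators label the same clips. Below is a minimal sketch of Cohen's kappa, which corrects the raw agreement rate for the agreement expected by chance. The label sequences are hypothetical, and the databases discussed here do not necessarily report this statistic.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators' label sequences."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected if both annotators labelled at random with their own label frequencies.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one and the same label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical labels from two trained annotators for six clips.
annotator_1 = ["happy", "happy", "sad", "angry", "sad", "happy"]
annotator_2 = ["happy", "sad", "sad", "angry", "sad", "happy"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.739
```

Here the annotators agree on five of six clips (about 0.83), but kappa is lower because some of that agreement would be expected by chance.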

Researchers work with three types of databases: databases of peak expression images only, databases of image sequences portraying an emotion from neutral to its peak, and video clips with emotional annotations. Many facial expression databases have been created and made public for expression recognition. Two of the most widely used are CK+ and JAFFE.

====Emotion classification====

In cross-cultural research on the Fore tribesmen of Papua New Guinea at the end of the 1960s, Paul Ekman proposed that facial expressions of emotion are not culturally determined but universal. He therefore suggested that they are biological in origin and can be reliably categorized. In 1972 he officially put forth six basic emotions:[29]

* [[Anger]]
* [[Disgust]]
* [[Fear]]
* [[Happiness]]
* [[Sadness]]
* [[Surprise (emotion)|Surprise]]

However, in the 1990s Ekman expanded his list of basic emotions to include a range of positive and negative emotions, not all of which are encoded in facial muscles. The newly included emotions are:
# Amusement
# Contempt
# Contentment
# Embarrassment
# Excitement
# Guilt
# Pride in achievement
# Relief
# Satisfaction
# Sensory pleasure
# Shame

====Facial Action Coding System====

Psychologists have devised a system to formally categorize the physical expression of emotions on faces. The central concept of the Facial Action Coding System (FACS), created by Paul Ekman and Wallace V. Friesen in 1978 based on earlier work by Carl-Herman Hjortsjö,[31] is the action unit (AU). Action units are, basically, a contraction or a relaxation of one or more muscles. Psychologists have proposed the following classification of six basic emotions according to their action units ("+" here means "and"):

{| class="wikitable sortable"

Line 405 / Line 429:

As with any computational practice, affect detection by facial processing has obstacles that must be overcome in order to fully unlock the potential of the overall algorithm or method employed. In the early days of almost every kind of AI-based detection (speech recognition, face recognition, affect recognition), the accuracy of modeling and tracking was an issue. As hardware evolves, more data are collected, and new discoveries and practices are introduced, this lack of accuracy fades, leaving behind noise issues. Methods for noise removal exist, however, including neighborhood averaging, linear Gaussian smoothing, median filtering,[32] and newer methods such as the Bacterial Foraging Optimization Algorithm.<ref>Clever Algorithms. "Bacterial Foraging Optimization Algorithm – Swarm Algorithms – Clever Algorithms". Clever Algorithms. Retrieved 21 March 2011.</ref><ref>"Soft Computing". Soft Computing. Retrieved 18 March 2011.</ref>
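Two of the classic noise-removal methods named above, neighborhood averaging and median filtering, can be sketched on a small grayscale image stored as a list of rows. This is a minimal illustration that assumes an odd window size and handles borders by replicating edge pixels. Both are design choices rather than requirements. The example shows why the median filter removes an isolated outlier that the mean filter only spreads out.

```python
import statistics


def _window(img, r, c, k):
    """Values in the k x k window centred on (r, c), replicating border pixels."""
    h, w = len(img), len(img[0])
    half = k // 2
    return [
        img[min(max(r + dr, 0), h - 1)][min(max(c + dc, 0), w - 1)]
        for dr in range(-half, half + 1)
        for dc in range(-half, half + 1)
    ]


def neighborhood_average(img, k=3):
    """Mean filter: each pixel becomes the average of its k x k neighborhood."""
    return [[sum(_window(img, r, c, k)) / (k * k) for c in range(len(img[0]))]
            for r in range(len(img))]


def median_filter(img, k=3):
    """Median filter: robust to isolated outliers such as salt-and-pepper noise."""
    return [[statistics.median(_window(img, r, c, k)) for c in range(len(img[0]))]
            for r in range(len(img))]


# Hypothetical 5 x 5 patch of uniform skin (value 10) with one "salt" pixel.
img = [[10] * 5 for _ in range(5)]
img[2][2] = 255
print(median_filter(img)[2][2])                    # 10
print(round(neighborhood_average(img)[2][2], 2))   # 37.22
```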

Other challenges include:
* Posed expressions, as used by most subjects of the various studies, are not natural, and therefore algorithms trained on them may not apply to natural expressions.
* The lack of rotational freedom of movement. Affect detection works very well with frontal views, but when the head is rotated more than 20 degrees, "there've been problems".[35]
* Facial expressions do not always correspond to an underlying emotion that matches them (e.g. they can be posed or faked, or a person can feel emotions but maintain a "poker face").
* FACS does not include dynamics, while dynamics can help disambiguate (e.g. smiles of genuine happiness tend to have different dynamics than "try to look happy" smiles).
* FACS combinations do not correspond in a 1:1 way with the emotions that psychologists originally proposed (this lack of a 1:1 mapping also occurs in speech recognition, with homophones, homonyms and many other sources of ambiguity, and may be mitigated by bringing in other channels of information).
* Accuracy of recognition is improved by adding context; however, adding context and other modalities increases computational cost and complexity.

===Body gesture===

Line 438 / Line 457:

Gestures could be used efficiently as a means of detecting a particular emotional state of the user, especially in conjunction with speech and face recognition. Depending on the specific action, gestures could be simple reflexive responses, like lifting your shoulders when you don't know the answer to a question, or they could be complex and meaningful, as when communicating with sign language. Without making use of any object or the surrounding environment, we can wave our hands, clap or beckon. On the other hand, when using objects, we can point at them, move, touch or handle them. A computer should be able to recognize these gestures, analyze the context and respond in a meaningful way in order to be used efficiently for human–computer interaction.

There are many proposed methods<ref name="JK">J. K. Aggarwal, Q. Cai, Human Motion Analysis: A Review, Computer Vision and Image Understanding, Vol. 73, No. 3, 1999</ref> to detect body gestures. Some literature differentiates two approaches to gesture recognition: 3D-model-based and appearance-based.<ref name="Vladimir">{{cite journal | first1 = Vladimir I. | last1 = Pavlovic | first2 = Rajeev | last2 = Sharma | first3 = Thomas S. | last3 = Huang | url = http://www.cs.rutgers.edu/~vladimir/pub/pavlovic97pami.pdf | title = Visual Interpretation of Hand Gestures for Human–Computer Interaction: A Review | journal = [[IEEE Transactions on Pattern Analysis and Machine Intelligence]] | volume = 19 | issue = 7 | pages = 677–695 | year = 1997 | doi = 10.1109/34.598226 }}</ref> The former makes use of 3D information about key elements of the body parts in order to obtain several important parameters, like palm position or joint angles. Appearance-based systems, on the other hand, use images or videos for direct interpretation. Hand gestures have been a common focus of body gesture detection methods.<ref name="Vladimir"/>
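In the 3D-model-based approach, a parameter such as a joint angle follows directly from three 3D keypoints, for example shoulder, elbow and wrist. Below is a minimal sketch, assuming the keypoints have already been estimated by some body-tracking system. The coordinates are hypothetical.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b formed by 3D keypoints a-b-c (e.g. shoulder-elbow-wrist)."""
    v1 = [ai - bi for ai, bi in zip(a, b)]
    v2 = [ci - bi for ci, bi in zip(c, b)]
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0 or n2 == 0:
        raise ValueError("keypoints must not coincide")
    cos_theta = sum(x * y for x, y in zip(v1, v2)) / (n1 * n2)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(cos_theta))


# Hypothetical keypoints (arbitrary units): shoulder, elbow, wrist.
shoulder, elbow = (0.0, 0.0, 0.0), (0.0, -3.0, 0.0)
print(joint_angle(shoulder, elbow, (2.0, -3.0, 0.0)))  # bent arm
print(joint_angle(shoulder, elbow, (0.0, -6.0, 0.0)))  # straight arm
```

A sequence of such angles over time is the kind of compact parameter a 3D-model-based recognizer can classify, in contrast to the raw pixels used by appearance-based systems.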

===Physiological monitoring===

Line 451 / Line 470:

Physiological monitoring can be used to detect a user's affective state by tracking and analyzing their physiological signs. These signs range from changes in heart rate and skin conductance to minute contractions of the facial muscles and changes in facial blood flow. This area is gaining momentum, and real products that implement these techniques are now appearing. The four main physiological signs that are usually analyzed are blood volume pulse, galvanic skin response, facial electromyography, and facial color patterns.

====Blood volume pulse====

Line 469 / Line 488:

A subject's blood volume pulse (BVP) can be measured by a process called photoplethysmography, which produces a graph indicating blood flow through the extremities.<ref>Picard, Rosalind (1998). Affective Computing. MIT.</ref> The peaks of the waves indicate a cardiac cycle in which the heart has pumped blood to the extremities. If the subject experiences fear or is startled, their heart usually 'jumps' and beats quickly for some time, while less blood reaches the extremities. This can clearly be seen on a photoplethysmograph as a decrease in the distance between the trough and the peak of the wave. As the subject calms down and the body's inner core expands, allowing more blood to flow back to the extremities, the cycle returns to normal.
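The two quantities this paragraph relies on are the time between successive peaks (heart rate) and the trough-to-peak distance. Both can be read off a sampled photoplethysmogram. Below is a minimal sketch, assuming a clean BVP trace sampled at `fs` Hz. Real recordings need filtering and artifact rejection, and the synthetic sine wave only stands in for a pulse waveform.

```python
import math


def find_peaks(signal, min_distance):
    """Indices of local maxima that are at least min_distance samples apart."""
    peaks = []
    for i in range(1, len(signal) - 1):
        if signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]:
            if not peaks or i - peaks[-1] >= min_distance:
                peaks.append(i)
    return peaks


def ppg_summary(signal, fs):
    """Mean heart rate (beats per minute) and mean trough-to-peak amplitude of a BVP trace."""
    # Refractory distance of 0.3 s assumes the heart rate stays below 200 bpm.
    peaks = find_peaks(signal, min_distance=int(0.3 * fs))
    if len(peaks) < 2:
        raise ValueError("need at least two beats")
    beat_intervals = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]
    heart_rate = 60.0 / (sum(beat_intervals) / len(beat_intervals))
    # Per beat: peak value minus the lowest sample since the previous peak.
    amplitudes = [signal[b] - min(signal[a:b]) for a, b in zip(peaks, peaks[1:])]
    return heart_rate, sum(amplitudes) / len(amplitudes)


# Synthetic 10 s trace at 100 Hz: a 1.2 Hz (72 bpm) sine standing in for the pulse wave.
fs = 100
trace = [1.0 + math.sin(2 * math.pi * 1.2 * n / fs) for n in range(10 * fs)]
hr, amp = ppg_summary(trace, fs)
print(round(hr), round(amp, 1))  # 72 2.0
```

A startle response would show up as a higher `hr` together with a smaller `amp`. The amplitude corresponds to the trough-to-peak distance described above.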

=====Methodology=====

Line 495 / Line 514:

It can be cumbersome to ensure that the sensor shining an infra-red light and monitoring the reflected light is always pointing at the same extremity, especially as subjects often stretch and readjust their position while using a computer. There are other factors that can affect one's blood volume pulse. Because it is a measure of blood flow through the extremities, if the subject feels hot or particularly cold, their body may allow more or less blood to flow to the extremities, regardless of the subject's emotional state.

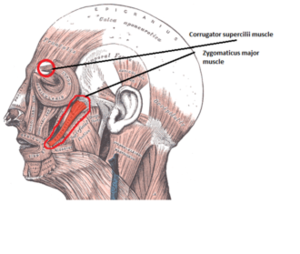

[[File:Em-face-2.png|thumb|left|The corrugator supercilii muscle and zygomaticus major muscle are the two main muscles used for measuring electrical activity in facial electromyography|链接=Special:FilePath/Em-face-2.png]]

| − | |||

| − | |||

| − | |||

| − | |||

====Facial electromyography====

Facial electromyography is a technique used to measure the electrical activity of the facial muscles by amplifying the tiny electrical impulses that muscle fibers generate when they contract.[39] The face expresses a great deal of emotion; however, two main facial muscle groups are usually studied to detect emotion. The corrugator supercilii muscle, also known as the 'frowning' muscle, draws the brow down into a frown, and is therefore the best test for negative, unpleasant emotional response. The zygomaticus major muscle is responsible for pulling the corners of the mouth back when you smile, and is therefore the muscle used to test for a positive emotional response.
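A common way to summarize the activity of each muscle is the root-mean-square (RMS) amplitude of its amplified EMG signal, compared against a resting baseline. The sketch below does this for the two muscles described above and combines them into a single difference score. That score is a hypothetical illustration of the positive/negative logic in the text, not a standard index, and the sample values are invented.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a signal segment."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def emg_difference_score(zygomaticus, corrugator, rest_zygomaticus, rest_corrugator):
    """Hypothetical score: baseline-relative zygomaticus RMS minus corrugator RMS.

    Values above 0 lean towards a positive response, values below 0 towards a negative one.
    """
    zyg = rms(zygomaticus) / rms(rest_zygomaticus)
    cor = rms(corrugator) / rms(rest_corrugator)
    return zyg - cor


# Hypothetical, already amplified and filtered signals (arbitrary units).
rest = [1.0, -1.0] * 50
smiling = [2.0, -2.0] * 50
print(emg_difference_score(smiling, rest, rest, rest))  # 1.0
```

Normalizing each muscle by its own resting level keeps differences in electrode placement or skin impedance from dominating the comparison.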

[[File:Gsrplot.svg|500px|thumb|A plot of skin resistance, measured using GSR, against time while the subject played a video game. Several clear peaks are visible in the graph, which suggests that GSR is a good method of differentiating between an aroused and a non-aroused state. For example, at the start of the game, where there is usually little exciting gameplay, a high level of resistance is recorded, which suggests a low level of conductivity and therefore less arousal. This contrasts clearly with the sudden trough when the player's character is killed, a moment at which players are usually very stressed and tense.|链接=Special:FilePath/Gsrplot.svg]]

| − | |||

| − | |||

| − | |||

| − | |||

====Galvanic skin response====

Line 529 / Line 540:

Galvanic skin response (GSR) is an outdated term for a more general phenomenon known as [[electrodermal activity]] or EDA. EDA is a general phenomenon whereby the skin's electrical properties change. The skin is innervated by the [[sympathetic nervous system]], so measuring its resistance or conductance provides a way to quantify small changes in the sympathetic branch of the autonomic nervous system. As the sweat glands are activated, even before the skin feels sweaty, the level of EDA can be captured (usually using conductance) and used to discern small changes in autonomic arousal. The more aroused a subject is, the greater the skin conductance tends to be.[38]

Skin conductance is often measured using two small [[silver-silver chloride]] electrodes placed somewhere on the skin, with a small voltage applied between them. To maximize comfort and reduce irritation, the electrodes can be placed on the wrist, legs, or feet, which leaves the hands fully free for daily activity.
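With a known voltage applied across the electrodes, conductance follows from Ohm's law as the measured current divided by the voltage. It is usually reported in microsiemens. Individual skin conductance responses can then be counted with a simple trough-to-peak rule. Below is a minimal sketch, assuming a clean, evenly sampled trace. The 0.05 µS minimum amplitude and the sample values are assumptions chosen for illustration.

```python
def conductance_us(voltage_v, current_a):
    """Skin conductance in microsiemens from applied voltage and measured current (G = I / V)."""
    return current_a / voltage_v * 1e6


def count_scrs(conductance_trace, min_amplitude=0.05):
    """Count skin conductance responses with a simple trough-to-peak rule.

    A response is a rise from a local minimum to the next local maximum that is
    at least min_amplitude microsiemens; smaller wiggles are treated as noise.
    """
    count = 0
    trough = conductance_trace[0]
    rising = False
    for prev, cur in zip(conductance_trace, conductance_trace[1:]):
        if cur > prev and not rising:
            trough, rising = prev, True          # a rise starts at this local minimum
        elif cur < prev and rising:
            if prev - trough >= min_amplitude:   # prev was the local maximum
                count += 1
            rising = False
    if rising and conductance_trace[-1] - trough >= min_amplitude:
        count += 1                               # the trace ends while still rising
    return count


print(conductance_us(0.5, 1e-6))
# Hypothetical trace in microsiemens: two real responses and one small wiggle.
trace = [2.0, 2.0, 2.1, 2.3, 2.25, 2.2, 2.2, 2.21, 2.2, 2.4, 2.35]
print(count_scrs(trace))  # 2
```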

Line 553 / Line 564:

The surface of the human face is supplied by a large network of blood vessels. Blood flow variations in these vessels yield visible color changes on the face. Whether or not facial emotions activate facial muscles, variations in blood flow, blood pressure, glucose levels, and other changes occur. Also, the facial color signal is independent of that provided by facial muscle movements.<ref>Carlos F. Benitez-Quiroz, Ramprakash Srinivasan, Aleix M. Martinez, Facial color is an efficient mechanism to visually transmit emotion, PNAS. April 3, 2018 115 (14) 3581–3586; first published March 19, 2018 https://doi.org/10.1073/pnas.1716084115.</ref>

=====Methodology=====

Line 565 / Line 576:

These approaches are based on changes in facial color. Delaunay triangulation is used to divide the face into triangular local areas, and the triangles that cover the interior of the mouth and eyes (sclera and iris) are removed. The pixels of the remaining triangular areas are used to build feature vectors. Converting pixel colors from the standard RGB color space to a color space such as oRGB<ref>M. Bratkova, S. Boulos, and P. Shirley, "oRGB: a practical opponent color space for computer graphics", ''IEEE Computer Graphics and Applications'', 29(1):42–55, 2009.</ref> or to LMS channels has been shown to perform better when dealing with faces.<ref>Hadas Shahar, Hagit Hel-Or, "Micro Expression Classification using Facial Color and Deep Learning Methods", ''The IEEE International Conference on Computer Vision (ICCV)'', 2019, pp. 0–0.</ref> The feature vectors are therefore mapped into such a color space and decomposed into red-green and yellow-blue channels, and deep learning methods are then used to find the corresponding emotions.
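
As a rough illustration of the color-decomposition step, the sketch below converts the mean RGB color of each retained triangle into yellow-blue and red-green opponent values and concatenates them into one feature vector. It assumes the triangles, their mean colors, and the indices of the removed mouth/eye triangles are already available; the function names are hypothetical. The 3×3 matrix is, to our understanding, the linear first stage of the oRGB transform of Bratkova et al.; the published transform also applies a nonlinear hue rotation, which is omitted here, so this is a simplified opponent space rather than oRGB itself.

```python
from typing import Iterable, List, Sequence, Tuple

# Linear RGB -> (luma, yellow-blue, red-green) matrix. Assumed to correspond to
# the first, linear stage of oRGB; the later nonlinear hue rotation is omitted.
OPPONENT_MATRIX: Tuple[Tuple[float, float, float], ...] = (
    (0.2990, 0.5870, 0.1140),   # luma
    (0.5000, 0.5000, -1.0000),  # yellow-blue: (R + G) / 2 - B
    (0.8660, -0.8660, 0.0000),  # red-green: proportional to R - G
)


def to_opponent(rgb: Sequence[float]) -> Tuple[float, float, float]:
    """Map one RGB triple (channels in [0, 1]) to (luma, yellow-blue, red-green)."""
    luma, yb, rg = (sum(w * c for w, c in zip(row, rgb)) for row in OPPONENT_MATRIX)
    return luma, yb, rg


def triangle_feature_vector(
    mean_colors: Sequence[Sequence[float]],
    removed: Iterable[int],
) -> List[float]:
    """Concatenate the red-green and yellow-blue values of every retained triangle.

    mean_colors[i] is the mean RGB color of triangle i (hypothetical input);
    `removed` lists the triangles covering the mouth and eye interiors.
    Luma is dropped in this sketch (an assumption) so only chromatic cues remain.
    """
    skip = set(removed)
    features: List[float] = []
    for i, rgb in enumerate(mean_colors):
        if i in skip:
            continue
        _, yb, rg = to_opponent(rgb)
        features.extend((rg, yb))
    return features


if __name__ == "__main__":
    # Three hypothetical triangles; triangle 1 lies inside the mouth.
    colors = [(1.0, 0.0, 0.0), (0.5, 0.5, 0.5), (0.0, 0.0, 1.0)]
    print(triangle_feature_vector(colors, removed=[1]))
```

The resulting vector would then be the input to whatever deep learning model is used; dropping luma is one possible design choice for focusing on color change, not something the cited work is claimed to do.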

===Visual aesthetics===

Aesthetics, in the world of art and photography, refers to the principles of the nature and appreciation of beauty. Judging beauty and other aesthetic qualities is a highly subjective task. Computer scientists at Penn State treat the challenge of automatically inferring the aesthetic quality of pictures from their visual content as a machine learning problem, using a peer-rated online photo-sharing website as a data source.<ref>Ritendra Datta, Dhiraj Joshi, Jia Li and James Z. Wang, "Studying Aesthetics in Photographic Images Using a Computational Approach", ''Lecture Notes in Computer Science'', vol. 3953, Proceedings of the European Conference on Computer Vision, Part III, pp. 288–301, Graz, Austria, May 2006.</ref> They extract visual features chosen on the intuition that such features can discriminate between aesthetically pleasing and displeasing images.
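
To show what "extracting visual features" can mean in practice, the sketch below computes two simple global features, mean brightness and mean saturation, using Python's standard `colorsys` module. These are illustrative low-level features of the general kind used in this line of work; the exact feature set of the Penn State study is not reproduced here, and the function name is hypothetical.

```python
import colorsys
from typing import Iterable, Tuple

Pixel = Tuple[float, float, float]  # RGB, each channel in [0, 1]


def global_aesthetic_features(pixels: Iterable[Pixel]) -> Tuple[float, float]:
    """Return (mean brightness, mean saturation) over an image's pixels.

    Illustrative only: a real system would combine many more features
    (composition, texture, sharpness, ...) before training a model.
    """
    total_v = total_s = 0.0
    count = 0
    for r, g, b in pixels:
        _, s, v = colorsys.rgb_to_hsv(r, g, b)  # HSV value acts as brightness
        total_s += s
        total_v += v
        count += 1
    if count == 0:
        raise ValueError("image has no pixels")
    return total_v / count, total_s / count


if __name__ == "__main__":
    # A hypothetical two-pixel "image": one pure red pixel, one black pixel.
    print(global_aesthetic_features([(1.0, 0.0, 0.0), (0.0, 0.0, 0.0)]))
```

Vectors like these, paired with the peer ratings as labels, could then be passed to a standard classifier.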

==Potential applications==

http://www.learntechlib.org/p/173785/

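Affect influences learners' learning state. Using affective computing technology, computers can judge learners' affect and learning state by recognizing their facial expressions. In teaching, teachers can use the analysis results to understand students' learning and receptive abilities and then formulate reasonable teaching plans. At the same time, paying attention to students' inner feelings is beneficial to their mental health. Especially in distance education, the separation of time and space means there is no emotional incentive for two-way communication between teachers and students. Without the atmosphere of a traditional classroom, students easily become bored, which affects learning outcomes. Applying affective computing to distance education systems can effectively improve this situation.[44]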

=== Healthcare ===

Social robots, as well as a growing number of robots used in health care, benefit from emotional awareness because they can better judge users' and patients' emotional states and alter their actions/programming appropriately. This is especially important in countries with growing aging populations and/or a lack of younger workers to address their needs.[45]

Affective computing is also being applied to the development of communicative technologies for use by people with autism.<ref>[http://affect.media.mit.edu/projects.php Projects in Affective Computing]</ref> The affective component of a text is also increasingly gaining attention, particularly its role in the so-called emotional or [[emotive Internet]].<ref>Shanahan, James; Qu, Yan; Wiebe, Janyce (2006). ''Computing Attitude and Affect in Text: Theory and Applications''. Dordrecht: Springer Science & Business Media. p. 94. {{ISBN|1402040261}}</ref>

=== Video games ===

Affective video games can access their players' emotional states through biofeedback devices.[48] A particularly simple form of biofeedback is available through gamepads that measure the pressure with which a button is pressed; this has been shown to correlate strongly with the players' level of arousal.[49] At the other end of the scale are brain–computer interfaces.[50][51] Affective games have been used in medical research to support the emotional development of autistic children.[52]
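
A claim such as "button pressure correlates with arousal" is typically checked by comparing two per-trial series. The sketch below computes the Pearson correlation coefficient between them; the idea of pairing normalized pressure readings with self-reported arousal ratings is an illustrative assumption, not the protocol of the cited study.

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Hypothetical per-trial values: normalized button pressure and arousal rating.
    pressure = [0.2, 0.4, 0.6, 0.8]
    arousal = [1, 2, 3, 4]
    print(pearson_r(pressure, arousal))
```

Values near +1 indicate that harder presses accompany higher arousal; a real analysis would also need enough trials and a significance test.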

=== Other applications ===

Other potential applications center on social monitoring. For example, a car can monitor the emotions of all occupants and take additional safety measures, such as alerting other vehicles if it detects that the driver is angry.[53] Affective computing also has potential applications in human–computer interaction, such as affective mirrors that let users see how they perform, emotion-monitoring agents that issue a warning before an angry email is sent, or music players that select tracks based on mood.[54]

One idea put forth by the Romanian researcher Dr. Nicu Sebe in an interview is the analysis of a person's face while they are using a certain product (he mentioned ice cream as an example).<ref>{{cite web|url=https://www.sciencedaily.com/videos/2006/0811-mona_lisa_smiling.htm|title=Mona Lisa: Smiling? Computer Scientists Develop Software That Evaluates Facial Expressions|date=1 August 2006|website=ScienceDaily|archive-url=https://web.archive.org/web/20071019235625/http://sciencedaily.com/videos/2006/0811-mona_lisa_smiling.htm|archive-date=19 October 2007|url-status=dead}}</ref> Companies could then use such analysis to infer whether their product is likely to be well received by its market.

Affective state recognition could also be used to judge the impact of a TV advertisement, by recording a viewer on video in real time and then studying his or her facial expressions. By averaging the results obtained from a large group of subjects, one can tell whether the commercial (or movie) has the desired effect and which elements interest viewers most.
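
The averaging step can be sketched as follows. This assumes each viewer's recording has already been reduced by some facial-expression classifier to one score per segment of the advertisement (for example, the probability of a smile); those scores, the segmentation, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def average_response(per_subject: Sequence[Sequence[float]]) -> List[float]:
    """Average each ad segment's score over all subjects."""
    if not per_subject:
        raise ValueError("no subjects")
    n_segments = len(per_subject[0])
    if any(len(scores) != n_segments for scores in per_subject):
        raise ValueError("every subject needs a score for every segment")
    n_subjects = len(per_subject)
    return [sum(scores[i] for scores in per_subject) / n_subjects
            for i in range(n_segments)]


def most_engaging_segment(per_subject: Sequence[Sequence[float]]) -> Tuple[int, float]:
    """Return (segment index, mean score) of the segment with the highest mean."""
    means = average_response(per_subject)
    best = max(range(len(means)), key=means.__getitem__)
    return best, means[best]


if __name__ == "__main__":
    # Hypothetical scores: three viewers, three segments of one commercial.
    scores = [[0.1, 0.7, 0.2], [0.3, 0.9, 0.1], [0.2, 0.5, 0.3]]
    print(average_response(scores))
    print(most_engaging_segment(scores))
```

Averaging over many subjects smooths out individual differences in expressiveness, which is why the text stresses a large group.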

==Cognitivist vs. interactional approaches==

Within the field of human–computer interaction, Rosalind Picard's cognitivist, or "information model", concept of emotion has been criticized by, and contrasted with, the "post-cognitivist" or "interactional" pragmatist approach taken by Kirsten Boehner and others, which views emotion as inherently social.[56]

Picard's focus is human–computer interaction, and her goal for affective computing is to "give computers the ability to recognize, express, and in some cases, 'have' emotions".<ref name="Affective Computing"/> In contrast, the interactional approach seeks to help "people to understand and experience their own emotions"<ref name="How emotion is made and measured"/> and to improve computer-mediated interpersonal communication. It does not necessarily seek to map emotion into an objective mathematical model for machine interpretation; rather, it lets humans make sense of each other's emotional expressions in open-ended ways that may be ambiguous, subjective, and sensitive to context.<ref name="How emotion is made and measured"/>{{rp|284}}{{example needed|date=September 2018}}

Picard's critics describe her concept of emotion as "objective, internal, private, and mechanistic". They argue that it reduces emotion to a discrete psychological signal, occurring inside the body, that can be measured and serves as an input to cognition, thereby undercutting the complexity of emotional experience.<ref name="How emotion is made and measured"/>{{rp|280}}<ref name="How emotion is made and measured"/>{{rp|278}}

The interactional approach asserts that though emotion has biophysical aspects, it is "culturally grounded, dynamically experienced, and to some degree constructed in action and interaction".<ref name="How emotion is made and measured"/>{{rp|276}} Put another way, it considers "emotion as a social and cultural product experienced through our interactions".<ref>{{cite journal|last1=Boehner|first1=Kirsten|last2=DePaula|first2=Rogerio|last3=Dourish|first3=Paul|last4=Sengers|first4=Phoebe|title=Affection: From Information to Interaction|journal=Proceedings of the Aarhus Decennial Conference on Critical Computing|date=2005|pages=59–68}}</ref><ref name="How emotion is made and measured">{{cite journal|last1=Boehner|first1=Kirsten|last2=DePaula|first2=Rogerio|last3=Dourish|first3=Paul|last4=Sengers|first4=Phoebe|title=How emotion is made and measured|journal=International Journal of Human–Computer Studies|date=2007|volume=65|issue=4|pages=275–291|doi=10.1016/j.ijhcs.2006.11.016}}</ref><ref>{{cite journal|last1=Hook|first1=Kristina|last2=Staahl|first2=Anna|last3=Sundstrom|first3=Petra|last4=Laaksolahti|first4=Jarmo|title=Interactional empowerment|journal=Proc. CHI|date=2008|pages=647–656|url=http://research.microsoft.com/en-us/um/cambridge/projects/hci2020/pdf/interactional%20empowerment%20final%20Jan%2008.pdf}}</ref>

==See also==

<small>This page was moved from [[wikipedia:en:Affective computing]]. Its edit history can be viewed at [[情感计算/edithistory]]</small></noinclude>

|} | |} | ||

Revision as of 15:37, 24 July 2021 (Saturday)

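This entry was machine-translated by 彩云小译 (Caiyun Xiaoyi), 5,068 characters in total, and has not yet been manually edited or proofread; we apologize for any inconvenience to readers.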

Affective computing is the study and development of systems and devices that can recognize, interpret, process, and simulate human affects. It is an interdisciplinary field spanning computer science, psychology, and cognitive science.[1] While some core ideas in the field may be traced as far back as early philosophical inquiries into emotion,[2] the more modern branch of computer science originated with Rosalind Picard's 1995 paper on affective computing (MIT Technical Report #321)[3] and her book Affective Computing,[4] published by MIT Press.[5][6] One of the motivations for the research is to give machines emotional intelligence, including the ability to simulate empathy. The machine should interpret the emotional state of humans and adapt its behavior to them, giving an appropriate response to those emotions.

Areas

Detecting and recognizing emotional information

Detecting emotional information usually begins with passive sensors that capture data about the user's physical state or behavior without interpreting the input. The data gathered is analogous to the cues humans use to perceive emotions in others. For example, a video camera might capture facial expressions, body posture, and gestures, while a microphone might capture speech. Other sensors detect emotional cues by directly measuring physiological data, such as skin temperature and galvanic resistance.[7]

Recognizing emotional information requires extracting meaningful patterns from the gathered data. This is done using machine learning techniques that process different modalities, such as speech recognition, natural language processing, or facial expression detection. The goal of most of these techniques is to produce labels that match the labels a human perceiver would give in the same situation. For example, if a person furrows their brow, a computer vision system might be taught to label their face as appearing "confused", "concentrating", or "slightly negative" (as opposed to "positive", which it might say if they were smiling in a happy-appearing way). These labels may or may not correspond to what the person is actually feeling.

Emotion in machines

Another area within affective computing is the design of computational devices that either exhibit innate emotional capabilities or can convincingly simulate emotions. A more practical approach, given current technological capabilities, is to simulate emotions in conversational agents in order to enrich and facilitate interaction between humans and machines.[8]

Marvin Minsky, one of the pioneering computer scientists in artificial intelligence, relates emotions to the broader issues of machine intelligence, stating in The Emotion Machine that emotion is "not especially different from the processes that we call 'thinking.'"[9]

Technologies

In psychology, cognitive science, and in neuroscience, there have been two main approaches for describing how humans perceive and classify emotion: continuous or categorical. The continuous approach tends to use dimensions such as negative vs. positive, calm vs. aroused.

The categorical approach tends to use discrete classes such as happy, sad, angry, fearful, surprise, and disgust. Machine learning regression models can be used to have machines produce continuous labels, and classification models to produce discrete ones. Sometimes models are also built that allow combinations across the categories, e.g. a happy-surprised face or a fearful-surprised face.[10]
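
The two labelling schemes can be sketched as plain data types. This is an illustration under stated assumptions: the [-1, 1] axis ranges, the 0.5 threshold, and the idea that a classifier outputs one independent score per category (as per-class sigmoid outputs would) are choices made for the example, not taken from the cited work. A regression model would output a `DimensionalLabel`; a multi-label classifier's scores would go through `categorical_labels`.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence

# Discrete classes named in the text (adjective forms chosen for this sketch).
CATEGORIES = ("happy", "sad", "angry", "fearful", "surprised", "disgusted")


@dataclass(frozen=True)
class DimensionalLabel:
    """Continuous label; both axes are assumed to lie in [-1, 1]."""
    valence: float  # negative (-1) ... positive (+1)
    arousal: float  # calm (-1) ... aroused (+1)

    def __post_init__(self) -> None:
        for name in ("valence", "arousal"):
            value = getattr(self, name)
            if not -1.0 <= value <= 1.0:
                raise ValueError(f"{name} must be in [-1, 1], got {value}")


def categorical_labels(scores: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Return every category whose independent score reaches the threshold.

    Scoring categories independently instead of taking a single argmax is
    what lets the output combine classes, e.g. happy + surprised.
    """
    return [c for c in CATEGORIES if scores.get(c, 0.0) >= threshold]


def combined_name(labels: Sequence[str]) -> str:
    """Join co-occurring categories into a compound label such as 'happy-surprised'."""
    return "-".join(labels) if labels else "none"


if __name__ == "__main__":
    print(DimensionalLabel(valence=0.6, arousal=0.4))
    picked = categorical_labels({"happy": 0.8, "surprised": 0.7, "sad": 0.1})
    print(combined_name(picked))
```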

The following sections consider many of the kinds of input data used for the task of emotion recognition.

Emotional speech

Various changes in the autonomic nervous system can indirectly alter a person's speech, and affective technologies can leverage this information to recognize emotion. For example, speech produced in a state of fear, anger, or joy becomes fast, loud, and precisely enunciated, with a higher and wider range in pitch, whereas emotions such as tiredness, boredom, or sadness tend to generate slow, low-pitched, and slurred speech.[11] Some emotions have been found to be more easily computationally identified, such as anger[12] or approval.[13]

Emotional speech processing technologies recognize the user's emotional state using computational analysis of speech features. Vocal parameters and prosodic features such as pitch variables and speech rate can be analyzed through pattern recognition techniques.[12][14]

Speech analysis is an effective way to identify affective state; recent studies report an average accuracy of 70 to 80%.[15][16] These systems tend to outperform average human accuracy (approximately 60%[12]) but are less accurate than systems that use other modalities for emotion detection, such as physiological states or facial expressions.[17] However, because many speech characteristics are independent of semantics or culture, this technique is considered a promising route for further research.[18]

Algorithms

Speech or text affect detection requires a reliable database, knowledge base, or vector space model[19] broad enough to cover every need of the application, together with a well-chosen classifier that allows quick and accurate emotion identification.

Currently, the most frequently used classifiers are linear discriminant classifiers (LDC), k-nearest neighbor (k-NN), Gaussian mixture models (GMM), support vector machines (SVM), artificial neural networks (ANN), decision tree algorithms, and hidden Markov models (HMMs).[20] Various studies have shown that choosing an appropriate classifier can significantly improve the overall performance of the system.[17] The list below briefly describes each algorithm:

- LDC – Classification is based on the value of a linear combination of the feature values, which are usually provided as a feature vector.

- k-NN – Classification is done by locating the object in the feature space and comparing it with its k nearest neighbors (training examples); the majority vote among them decides the class.

- GMM – a probabilistic model that represents the subpopulations within an overall population. Each subpopulation corresponds to one component of the mixture distribution, which allows observations to be classified into the subpopulations.[21]

- SVM – a type of (usually binary) linear classifier that decides which of two (or more) possible classes each input falls into; kernel functions, such as the radial basis function (RBF) kernel, also let it draw non-linear class boundaries.

- ANN – a mathematical model, inspired by biological neural networks, that can capture non-linearities in the feature space better than linear classifiers.

- Decision tree algorithms – classify by following a decision tree in which leaves represent classification outcomes and branches represent the conjunctions of feature tests that lead to those outcomes.

- HMMs – statistical Markov models in which the states and state transitions cannot be observed directly; only a series of outputs that depend on the states is visible. In affect recognition, the outputs are the sequence of speech feature vectors, from which the sequence of states the model passed through can be inferred. The states can correspond to intermediate steps in the expression of an emotion, and each state has a probability distribution over the possible output vectors. The state sequences make it possible to predict the affective state being classified, and HMMs are one of the most commonly used techniques in speech affect detection.
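
One common way to apply HMMs to emotion classification is to train one model per emotion and label an utterance with the emotion whose model makes the observed sequence most likely. The Python sketch below shows only that scoring step, using the scaled forward algorithm. It assumes, hypothetically, that each frame's feature vector has already been quantized into a discrete symbol (0 for a low pitch/energy frame, 1 for a high one); the model parameters are invented for illustration, training (for example with Baum–Welch) is omitted, and practical systems often use continuous output distributions such as Gaussian mixtures instead of discrete symbols.

```python
import math


def forward_log_likelihood(obs, start, trans, emit):
    """log P(obs | HMM) computed with the scaled forward algorithm.

    obs:   sequence of discrete observation symbols (ints)
    start: start[s] is the probability of starting in state s
    trans: trans[p][s] is the probability of moving from state p to state s
    emit:  emit[s][o] is the probability that state s outputs symbol o
    """
    n = len(start)
    log_lik = 0.0
    alpha = None
    for t, o in enumerate(obs):
        if t == 0:
            alpha = [start[s] * emit[s][o] for s in range(n)]
        else:
            alpha = [sum(alpha[p] * trans[p][s] for p in range(n)) * emit[s][o]
                     for s in range(n)]
        scale = sum(alpha)
        if scale == 0.0:
            return float("-inf")  # sequence is impossible under this model
        log_lik += math.log(scale)
        alpha = [a / scale for a in alpha]  # rescale to avoid underflow
    return log_lik


def classify(obs, models):
    """Return the label whose HMM assigns obs the highest likelihood."""
    return max(models, key=lambda label: forward_log_likelihood(obs, *models[label]))


# Hypothetical two-state models: (start, trans, emit).
# Symbol 0 = "low pitch/energy frame", symbol 1 = "high pitch/energy frame".
# All numbers are illustrative, not trained on any corpus.
MODELS = {
    "angry": ([0.6, 0.4], [[0.7, 0.3], [0.4, 0.6]], [[0.2, 0.8], [0.3, 0.7]]),
    "sad": ([0.5, 0.5], [[0.8, 0.2], [0.3, 0.7]], [[0.9, 0.1], [0.7, 0.3]]),
}

if __name__ == "__main__":
    print(classify([1, 1, 1, 0, 1, 1], MODELS))  # → angry
```

Because the forward probabilities are rescaled at every step, the log-likelihood stays finite even for long frame sequences, where the unscaled product of probabilities would underflow.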

It has been shown that, given enough acoustic evidence, a person's emotional state can be classified by a majority-voting ensemble of classifiers. The proposed ensemble is built from three base classifiers: kNN, C4.5, and an SVM with an RBF kernel, and it performs better than each base classifier used separately. It was compared with two other ensembles: a one-against-all (OAA) multiclass SVM with hybrid kernels, and an ensemble of two base classifiers, C5.0 and a neural network. The proposed variant outperformed both.[22]
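
A majority-voting ensemble of this kind can be sketched as follows. To keep the example short and dependency-free, it keeps k-NN but swaps in simpler stand-ins for the other two base classifiers: a one-split decision stump in place of C4.5 and a nearest-centroid rule in place of the RBF-kernel SVM. It therefore illustrates the voting scheme only, not the cited study's classifiers or results. The two-dimensional features (a normalized mean pitch and a normalized energy) and all data points are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train, x, k=3):
    """k-nearest-neighbour vote using Euclidean distance."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def centroid_predict(train, x):
    """Nearest class centroid: a simple stand-in, NOT an RBF-kernel SVM."""
    groups = {}
    for vec, label in train:
        groups.setdefault(label, []).append(vec)
    centroids = {label: [sum(col) / len(vecs) for col in zip(*vecs)]
                 for label, vecs in groups.items()}
    return min(centroids, key=lambda label: math.dist(centroids[label], x))


def fit_stump(train):
    """One-split decision tree: a stand-in, NOT C4.5. Picks the feature and
    threshold with the fewest training errors (first one found on ties)."""
    best = None
    for f in range(len(train[0][0])):
        for thr in sorted({vec[f] for vec, _ in train}):
            left = [lab for vec, lab in train if vec[f] <= thr]
            right = [lab for vec, lab in train if vec[f] > thr]
            if not left or not right:
                continue
            l_lab = Counter(left).most_common(1)[0][0]
            r_lab = Counter(right).most_common(1)[0][0]
            errors = (sum(lab != l_lab for lab in left)
                      + sum(lab != r_lab for lab in right))
            if best is None or errors < best[0]:
                best = (errors, f, thr, l_lab, r_lab)
    if best is None:  # no usable split: fall back to the majority label
        majority = Counter(lab for _, lab in train).most_common(1)[0][0]
        return lambda x: majority
    _, f, thr, l_lab, r_lab = best
    return lambda x: l_lab if x[f] <= thr else r_lab


def majority_vote(votes):
    """Most frequent label; ties go to the earliest vote (Counter ordering)."""
    return Counter(votes).most_common(1)[0][0]


def ensemble_predict(train, x):
    stump = fit_stump(train)
    return majority_vote([knn_predict(train, x), centroid_predict(train, x), stump(x)])


# Hypothetical training set: (normalized mean pitch, normalized energy), label.
TRAIN = [
    ((2.0, 1.8), "angry"), ((1.7, 2.2), "angry"), ((2.3, 2.1), "angry"),
    ((-1.9, -2.0), "sad"), ((-2.2, -1.7), "sad"), ((-1.8, -2.3), "sad"),
    ((0.1, -0.2), "neutral"), ((-0.2, 0.1), "neutral"), ((0.0, 0.3), "neutral"),
]

if __name__ == "__main__":
    print(ensemble_predict(TRAIN, (0.05, 0.0)))  # → neutral
```

On the query (0.05, 0.0) the stump votes "angry", but k-NN and the centroid rule both vote "neutral", so the ensemble answers "neutral". This is the effect that motivates voting: an error by one weak base classifier can be outvoted by the others.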

Databases

The vast majority of current systems are data-dependent. This creates one of the biggest challenges in speech-based emotion detection, because it requires choosing an appropriate database to train the classifier. Most of the data currently available was obtained from actors and is therefore a representation of archetypal emotions. These so-called acted databases are usually based on Paul Ekman's Basic Emotions theory, which assumes the existence of six basic emotions (anger, fear, disgust, surprise, joy, sadness), all other emotions being mixtures of these.[23] Nevertheless, acted databases offer high audio quality and balanced classes (although often too few of them), which contributes to high emotion recognition rates.

However, naturalistic data is preferred for real-life applications. A naturalistic database can be produced by observing and analyzing subjects in their natural context. Ultimately, such a database should allow the system to recognize emotions from their context and to work out the goals and outcomes of the interaction. Because this type of data describes states that occur naturally during human–computer interaction (HCI), it supports authentic real-life deployment.

Despite its many advantages over acted data, naturalistic data is difficult to obtain and usually has low emotional intensity. Moreover, data obtained in a natural context has lower signal quality, due to ambient noise and the distance between the subjects and the microphone. The first attempt to produce such a database was the FAU Aibo Emotion Corpus for CEICES (Combining Efforts for Improving Automatic Classification of Emotional User States), which was developed in a realistic setting of children (aged 10–13) playing with Sony's Aibo robot pet.[24][25] Beyond this, a single standard database for all emotion research would provide a common basis for evaluating and comparing different affect recognition systems.
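
Comparing systems also depends on the evaluation measure. Naturalistic recordings are often dominated by neutral speech, and on such class-imbalanced data plain accuracy rewards a classifier that always predicts the majority class. Unweighted average recall (UAR), the mean of the per-class recalls, weights every emotion class equally and is a measure often reported for such corpora. The sketch below contrasts the two on a hypothetical test set; the labels and counts are invented for illustration.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of utterances labelled correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def unweighted_average_recall(y_true, y_pred):
    """Mean of per-class recalls, so every emotion class counts equally."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return sum(hits[c] / totals[c] for c in totals) / len(totals)


if __name__ == "__main__":
    # Hypothetical imbalanced test set: 8 neutral and 2 angry utterances,
    # scored against a classifier that always answers "neutral".
    y_true = ["neutral"] * 8 + ["angry"] * 2
    y_pred = ["neutral"] * 10
    print(accuracy(y_true, y_pred))                   # → 0.8
    print(unweighted_average_recall(y_true, y_pred))  # → 0.5
```

The trivial classifier scores 80% accuracy but only 50% UAR, which is chance level for two classes; UAR exposes that it never recognizes the minority emotion.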

Speech descriptors
