Complexity


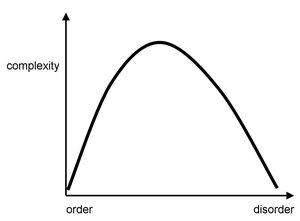

[[Image:complexity_figure1.jpg|thumb|300px|left|F1|Complexity as a mixture of order and disorder. Drawn after Huberman and Hogg (1986).]] | [[Image:complexity_figure1.jpg|thumb|300px|left|F1|Complexity as a mixture of order and disorder. Drawn after Huberman and Hogg (1986).]] | ||


Revision as of 00:56, 8 August 2021 (Sunday)

The complexity of a physical system or a dynamical process expresses the degree to which its components engage in organized, structured interactions. High complexity is achieved in systems that exhibit a mixture of order and disorder (regularity and randomness) and that have a high capacity to generate emergent phenomena.

Complexity across disciplines

Despite the importance and ubiquity of the concept of complexity in modern science and society, there is currently no general, widely accepted means of measuring the complexity of a physical object, system, or process. The lack of a general measure may reflect the nascent stage of our understanding of complex systems, for which a unified framework cutting across all natural and social sciences is still lacking. Although a general measure has so far remained elusive, there is a broad spectrum of complexity measures that apply to specific types of systems or problem domains. The justification for each of these measures often rests on its ability to produce intuitively correct values at the extremes of the complexity spectrum. Despite the heterogeneous approaches taken to defining and measuring complexity, the belief persists that there are properties common to all complex systems, and new proposals are continually being produced and tested by an interdisciplinary community of physicists, biologists, mathematicians, computer scientists, economists and social theorists.

This article deals with complexity from an information-theoretical perspective. A treatment of complexity from the perspective of dynamical systems can be found in another article (Complex Systems).

General features of complexity

Quantitative or analytic definitions of complexity often disagree about the precise formulation, or indeed even about the formal framework within which complexity is to be defined. Yet there is an informal notion of complexity based upon some of the ingredients that are shared by most, if not all, complex systems and processes. Herbert Simon was among the first to discuss the nature and architecture of complex systems (Simon, 1981), and he suggested defining complex systems as those that are “made up of a large number of parts that have many interactions.” Simon goes on to note that “in such systems the whole is more than the sum of the parts in the […] sense that, given the properties of the parts and the laws of their interaction, it is not a trivial matter to infer the properties of the whole.”

Components. Many complex systems can be decomposed into components (elements, units) that are structurally distinct and may function as individuals, generating some form of local behavior, activity or dynamics. Components of complex systems may themselves be decomposable into subcomponents, resulting in systems that are complex at multiple levels of organization and that form hierarchies of nearly, but not completely, decomposable components.

Interactions. Components of complex systems engage in dynamic interactions, resulting in the integration or binding of these components across space and time into an organized whole. Interactions often modulate the individual actions of the components, thus altering their local functionality by relaying global context. In many complex systems, interactions between subsets of components are mediated by some form of communication paths or connections. Such structured interactions are often sparse, i.e. only a small subset of all possible interactions is actually realized within the system. The specific patterns of these interactions are crucial in determining how the system functions as a whole. Some interactions between components may be strong, in the sense that they possess great efficacy with respect to individual components. Other interactions may be considered weak, as their effects are limited in size or sporadic across time. Both strong and weak interactions can have significant effects on the system as a whole.

Emergence. Interactions between components in integrated systems often generate phenomena, functions, or effects that cannot be trivially reduced to properties of the components alone. Instead, these functions emerge as a result of structured interactions and are properties of the system as a whole. In many cases, even a detailed and complete examination of the individual components will fail to predict the range of emergent processes that these components are capable of if allowed to interact as part of a system. Conversely, dissecting an integrated system into components and interactions generally results in the loss of the emergent process.

In summary, systems with numerous components capable of structured interactions that generate emergent phenomena may be called complex. The observation of complex systems poses many challenges, as an observer needs to record simultaneously the states and state transitions of many components and interactions. Such observations require choices concerning the definition of states, the state space and the time resolution. How states are defined and measured can affect other derived measures, such as those of system complexity.

Measures of complexity

Measures of complexity allow different systems to be compared to each other by applying a common metric. This is especially meaningful for systems that are structurally or functionally related. Differences in complexity among such related systems may reveal features of their organization that promote complexity.

Some measures of complexity are algorithmic in nature and attempt to identify a minimal description length. For these measures, complexity applies to a description, not to the system or process per se. Other measures of complexity take into account the time evolution of a system and often build on the theoretical foundations of statistical information theory.

Most extant complexity measures can be grouped into two main categories. Members of the first category (algorithmic information content and logical depth) capture the randomness, information content or description length of a system or process, with random processes possessing the highest complexity because they most resist compression. The second category (including statistical complexity, physical complexity and neural complexity) conceptualizes complexity as distinct from randomness. Here, complex systems are those that possess a high amount of structure or information, often across multiple temporal and spatial scales. Within this category of measures, highly complex systems are positioned somewhere between systems that are highly ordered (regular) and highly disordered (random). <figref>Complexity_figure1.jpg</figref> shows a schematic diagram of the shape of such measures; however, it should be emphasized again that no generally accepted quantitative expression linking complexity and disorder currently exists.

Complexity and randomness

Algorithmic information content was defined (Kolmogorov, 1965; Chaitin, 1977) as the amount of information contained in a string of symbols, given by the length of the shortest computer program that generates the string. Highly regular, periodic or monotonic strings can be computed by short programs and thus contain little information, while random strings require a program that is as long as the string itself, resulting in high (maximal) information content. Algorithmic information content (AIC) captures the amount of randomness of symbol strings, but seems ill-suited for applications to biological or neural systems and, in addition, has the inconvenient property of being uncomputable. For further discussion see algorithmic information theory.
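Because AIC is uncomputable, a common practical stand-in is the length of a string after lossless compression, which bounds the shortest description from above (up to the fixed size of the decompressor). The sketch below uses Python's standard `zlib` module as that stand-in to illustrate the regular-versus-random contrast described above; the choice of compressor and the test strings are assumptions for illustration, and the numbers it prints are not AIC values.

```python
import random
import zlib

def compressed_length(data: bytes) -> int:
    """Size in bytes of `data` after zlib compression: an upper-bound proxy for AIC."""
    return len(zlib.compress(data, 9))

# A highly regular string: a short program ("repeat '01' 5000 times") generates it.
periodic = b"01" * 5000

# A pseudorandom string of the same length, seeded for reproducibility.
rng = random.Random(0)
noisy = bytes(rng.getrandbits(8) for _ in range(10000))

print(compressed_length(periodic))  # small: the repetition is found and exploited
print(compressed_length(noisy))     # close to (or slightly above) 10000: no structure to exploit
```

A better compressor would tighten the bound but could never certify that the shortest program has been found, which is the practical face of uncomputability.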

Logical depth (Bennett, 1988) is related to AIC and additionally draws on computational complexity, defined as the minimal amount of computational resources (time, memory) needed to solve a given class of problems. Complexity as logical depth refers mainly to the running time of the shortest program capable of generating a given string or pattern. Like AIC, complexity as logical depth is a measure of a generative process and does not apply directly to an actually existing physical system or dynamical process. Computing logical depth requires knowing the shortest computer program, so the measure is subject to the same fundamental limitation as AIC.

Effective measure complexity (Grassberger, 1986) quantifies the complexity of a sequence by the amount of information contained in a given part of the sequence that is needed to predict the next symbol. Effective measure complexity can capture structure in sequences that range over multiple scales and it is related to the extensivity of entropy (see below).
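Effective measure complexity can be estimated from block entropies. Write H(n) for the Shannon entropy of length-n blocks and h_n = H(n) - H(n-1) for the entropy of the next symbol given the previous n-1. The measure is then the sum over n of (h_n - h), where h is the entropy rate. The sketch below is a minimal version under our own simplifying assumptions: it truncates the sum at a finite block length L, approximates h by h_L (so the sum telescopes to H(L) - L * h_L), and uses the plug-in entropy estimator, which is biased for short data or large L.

```python
from collections import Counter
import math
import random

def block_entropy(symbols, n):
    """Plug-in Shannon entropy (bits) of the empirical distribution of length-n blocks."""
    blocks = Counter(tuple(symbols[i:i + n]) for i in range(len(symbols) - n + 1))
    total = sum(blocks.values())
    return -sum((c / total) * math.log2(c / total) for c in blocks.values())

def effective_measure_complexity(symbols, L):
    """Finite-L estimate H(L) - L * h_L, with h_L = H(L) - H(L-1) standing in for the entropy rate."""
    h_L = block_entropy(symbols, L) - block_entropy(symbols, L - 1)
    return block_entropy(symbols, L) - L * h_L

period2 = [0, 1] * 500                             # regular, two phases
period3 = [0, 0, 1] * 1000                         # regular, three phases
rng = random.Random(0)
coin = [rng.randint(0, 1) for _ in range(100000)]  # i.i.d. fair coin flips

print(effective_measure_complexity(period2, 4))  # ~1 bit
print(effective_measure_complexity(period3, 4))  # ~1.585 bits (log2 3)
print(effective_measure_complexity(coin, 6))     # close to 0: nothing in the past helps prediction
```

The two periodic sequences have zero entropy rate, yet a positive measure equal to the information needed to know their phase; the random sequence has maximal entropy rate but almost no such structure.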

Thermodynamic depth (Lloyd and Pagels, 1988) relates the entropy of a system to the number of possible historical paths that led to its observed state, with “deep” systems being those that are “hard to build”, i.e. whose final state carries much information about the history leading up to it. The emphasis on how a system comes to be, its generative history, makes thermodynamic depth a complementary measure to logical depth. While thermodynamic depth has the advantage of being empirically calculable, problems with the definition of system states have been noted (Crutchfield and Shalizi, 1999). Although it aims to distinguish complex systems from random ones, its formalism essentially captures the amount of randomness created by a generative process and does not differentiate regular from random systems.

Complexity as structure and information

A simple way of quantifying complexity on a structural basis would be to count the number of components and/or interactions within a system. An examination of the number of structural parts (McShea, 1996) and functional behaviors of organisms across evolution demonstrates that these measures increase over time, a finding that contributes to the ongoing debate over whether complexity grows as a result of natural selection. However, numerosity alone may only be an indicator of complicatedness, but not necessarily of complexity. Large and highly coupled systems may not be more complex than those that are smaller and less coupled. For example, a very large system that is fully connected can be described in a compact manner and may tend to generate uniform behavior, while the description of a smaller but more heterogeneous system may be less compressible and its behavior may be more differentiated.
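The compressibility argument above can be made concrete with a rough, assumed proxy for description length: the `zlib`-compressed size of a graph's adjacency matrix (the true minimal description is uncomputable, as noted above for AIC). The sketch compares a 100-node fully connected graph with a 50-node graph whose edges are drawn at random with probability 0.5. The graph sizes, the random wiring and the '0'/'1' text encoding are illustrative choices, not part of any published measure.

```python
import random
import zlib

def adjacency_bytes(n, edge_prob, seed=0):
    """Undirected adjacency matrix (no self-loops) flattened to a b'0'/b'1' string."""
    rng = random.Random(seed)
    adj = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < edge_prob:  # edge_prob = 1.0 always connects
                adj[i][j] = adj[j][i] = 1
    return "".join(str(v) for row in adj for v in row).encode("ascii")

full_large = adjacency_bytes(100, 1.0)   # larger system, every pair coupled
hetero_small = adjacency_bytes(50, 0.5)  # smaller system, irregular wiring

# Compressed sizes in bytes: the larger, fully connected matrix compresses far better.
print(len(zlib.compress(full_large, 9)), len(zlib.compress(hetero_small, 9)))
```

The fully connected matrix has four times as many entries but is almost entirely predictable, so counting parts or connections alone would rank these two systems the opposite way.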

Effective complexity (Gell-Mann, 1995) measures the minimal description length of a system’s regularities. As such, this measure is related to AIC, but it attempts to distinguish regular features from random or incidental ones and therefore belongs within the family of complexity measures that aim at capturing how much structure a system contains. The separation of regular features from random ones may be difficult for any given empirical system, and it may crucially depend on criteria supplied by an external observer.

Physical complexity (Adami and Cerf, 2000) is related to effective complexity and is designed to estimate the complexity of any sequence of symbols that is about a physical world or environment. As such, the measure is particularly useful when applied to biological systems. It is defined as the Kolmogorov complexity (AIC) that is shared between a sequence of symbols (such as a genome) and some description of the environment in which that sequence has meaning (such as an ecological niche). Since the Kolmogorov complexity is not computable, neither is the physical complexity. However, the average physical complexity of an ensemble of sequences (e.g. the set of genomes of an entire population of organisms) can be approximated by the mutual information between the ensembles of sequences (genomes) and their environment (ecology). Experiments conducted in a digital ecology (Adami, 2002) demonstrated that the mutual information between self-replicating genomes and their environment increased along evolutionary time. Physical complexity has also been used to estimate the complexity of biomolecules. The structural and functional complexity of a set of RNA molecules was shown to correlate positively with physical complexity (Carothers et al., 2004), indicating a possible link between the functional capacities of evolved molecular structures and the amount of information they encode.
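A much-simplified version of the ensemble estimate can be written per site. Assuming aligned, equal-length sequences, independent sites, and that each site would be maximally random in the absence of any information about the environment, the mutual information reduces to L minus the sum of per-site entropies across the population (in units of log of the alphabet size). This per-site formula is a simplification adopted here for illustration, not the full measure; the function name and toy populations are hypothetical.

```python
from collections import Counter
import math

def physical_complexity(population, alphabet_size=4):
    """Per-site estimate of physical complexity, in units of log(alphabet_size).

    Assumes aligned sequences, independent sites, and a maximal unconditional
    entropy of 1 unit per site, giving C ~= L - sum_i H_i, where H_i is the
    entropy of site i across the population.
    """
    if len({len(seq) for seq in population}) != 1:
        raise ValueError("population must contain sequences of one common length")
    length = len(population[0])
    n = len(population)
    site_entropy_sum = 0.0
    for i in range(length):
        counts = Counter(seq[i] for seq in population)
        site_entropy_sum -= sum((c / n) * math.log(c / n, alphabet_size) for c in counts.values())
    return length - site_entropy_sum

# Every site fixed across the population: all L = 8 units are retained.
conserved = ["ACGTACGT"] * 20
# The first site is uniformly random across the population, costing one unit.
variable = [b + "CGTACGT" for b in "ACGT" * 5]

print(physical_complexity(conserved))  # 8.0
print(physical_complexity(variable))   # 7.0 (up to rounding)
```

Sites that selection has fixed count as information about the environment; sites free to vary count as none.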

Statistical complexity (Crutchfield and Young, 1989) is a component of a broader theoretical framework known as computational mechanics, and can be calculated directly from empirical data. To calculate the statistical complexity, each point in the time series is mapped to a corresponding symbol according to some partitioning scheme, so that the raw data become a stream of consecutive symbols. The symbol sequences are then clustered into causal states according to the following rule: two symbol sequences (i.e. histories of the dynamics) belong to the same causal state if the conditional probability of any future symbol is identical for these two histories. In other words, two symbol sequences are considered equivalent if, on average, they predict the same distribution of future dynamics. Once these causal states have been identified, the transition probabilities between causal states can be extracted from the data, and the long-run probability distribution over all causal states can be calculated. The statistical complexity is then defined as the Shannon entropy of this distribution over causal states. Statistical complexity can be calculated analytically for abstract systems such as the logistic map, cellular automata and many basic Markov processes; computational methods for constructing appropriate causal states in real systems, while computationally demanding, exist and have been applied in a variety of contexts.
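The procedure above can be sketched in a few steps: partition, collect histories, group histories with matching predictions, and take the entropy of the resulting state distribution. The sketch below makes several simplifying assumptions of our own. It uses a single threshold as the partition, compares only the next `future_len` symbols rather than the whole future, merges histories greedily when their predicted distributions differ by at most a tolerance `tol`, and approximates the long-run state distribution by empirical frequencies instead of deriving it from transition probabilities. Practical reconstruction algorithms are considerably more careful; the function names are hypothetical.

```python
from collections import Counter, defaultdict
import math
import random

def symbolize(series, threshold):
    """Binary partition of a real-valued series: 1 above `threshold`, else 0."""
    return [1 if x > threshold else 0 for x in series]

def statistical_complexity(symbols, k=2, future_len=1, tol=0.05):
    """Rough estimate (bits) of statistical complexity from a symbol sequence.

    Histories of length `k` are merged into one approximate causal state when their
    empirical distributions over the next `future_len` symbols differ by at most `tol`.
    """
    # Count how often each future follows each history.
    joint = defaultdict(Counter)
    for i in range(k, len(symbols) - future_len + 1):
        joint[tuple(symbols[i - k:i])][tuple(symbols[i:i + future_len])] += 1

    total = sum(sum(c.values()) for c in joint.values())
    states = []  # each entry: [representative future distribution, state probability]
    for past in sorted(joint):
        n = sum(joint[past].values())
        dist = {f: c / n for f, c in joint[past].items()}
        for state in states:
            rep = state[0]
            keys = set(rep) | set(dist)
            if max(abs(rep.get(f, 0.0) - dist.get(f, 0.0)) for f in keys) <= tol:
                state[1] += n / total  # same predictions: join this causal state
                break
        else:
            states.append([dist, n / total])  # new causal state

    # Shannon entropy of the empirical distribution over causal states.
    return -sum(p * math.log2(p) for _, p in states if p > 0)

print(statistical_complexity([0, 1] * 500))                        # 1.0: two phases
print(statistical_complexity([0, 0, 1] * 333, k=2, future_len=2))  # ~1.585 (log2 3)
rng = random.Random(0)
coin = [rng.randint(0, 1) for _ in range(100000)]
print(statistical_complexity(coin))                                # ~0: one causal state
```

A fair coin has maximal entropy but a single causal state, so its statistical complexity is near zero, which is the sense in which this measure separates complexity from randomness. For the period-3 sequence, comparing only one future symbol would wrongly merge two phases; looking two symbols ahead recovers all three.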

Predictive information (Bialek et al., 2001), while not in itself a complexity measure, can be used to separate systems into different complexity categories based on the principle of the extensivity of entropy. Extensivity manifests itself, for example, in systems composed of increasing numbers of homogeneous independent random variables. The Shannon entropy of such systems will grow linearly with their size. This linear growth of entropy with system size is known as extensivity. However, the constituent elements of a complex system are typically inhomogeneous and interdependent, so that as the number of random variables grows the entropy does not always grow linearly. The manner in which a given system departs from extensivity can be used to characterize its complexity.
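The two extremes of this argument can be checked by exact enumeration. The sketch below compares n homogeneous, independent fair bits, whose joint entropy is extensive, with n exact copies of a single bit, whose joint entropy does not grow at all. Both are toy systems chosen for illustration and neither is complex in the sense of this article; complex systems lie between these extremes, with entropy growth that departs from linearity in a structured way.

```python
import itertools
import math

def joint_entropy(pmf):
    """Shannon entropy (bits) of a joint distribution given as {outcome: probability}."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0)

def independent_bits(n, p=0.5):
    """Joint distribution of n homogeneous, independent Bernoulli(p) variables."""
    return {
        outcome: p ** sum(outcome) * (1 - p) ** (n - sum(outcome))
        for outcome in itertools.product((0, 1), repeat=n)
    }

def copied_bits(n, p=0.5):
    """Joint distribution of n fully dependent variables, all copies of one Bernoulli(p) bit."""
    return {(1,) * n: p, (0,) * n: 1 - p}

for n in range(1, 6):
    print(n, joint_entropy(independent_bits(n)), joint_entropy(copied_bits(n)))
# Independent: 1, 2, 3, 4, 5 bits (linear in n). Copies: 1 bit for every n.
```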

Neural complexity (Tononi et al., 1994), which may be applied to any empirically observed system including brains, is related to the extensivity of a system. One of its building blocks is integration (also called multi-information), a multivariate extension of mutual information that estimates the total amount of statistical structure within an arbitrarily large system. Integration is computed as the difference between the sum of the components’ individual entropies and the joint entropy of the system as a whole. The distribution of integration across multiple spatial scales captures the complexity of the system. Consider the following three cases (<figref>Complexity_figure2.jpg</figref>). (1) A system with components that are statistically independent will exhibit globally disordered or random dynamics. Its joint entropy will be exactly equal to the sum of the component entropies, and system integration will be zero, regardless of which spatial scale of the system is examined. (2) Any statistical dependence between components will lead to a contraction of the system’s joint entropy relative to the individual entropies, resulting in positive integration. If the components of a system are highly coupled and exhibit statistical dependencies as well as homogeneous dynamics (i.e. all components behave identically), then estimates of integration across multiple spatial scales of the system will, on average, follow a linear distribution. (3) If statistical dependencies are inhomogeneous (for example, involving groupings of components, modules, or hierarchical patterns), the distribution of integration will deviate from linearity. The total amount of deviation is the system’s complexity. The complexity of a random system is zero, while the complexity of a homogeneous coupled (regular) system is very low. Systems with rich structure and dynamic behavior have high complexity.
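The deviation from linearity described above can be written as C_N(X) = sum over k = 1..n of [(k/n) I(X) - <I(X_j^k)>], where <I(X_j^k)> is the average integration over all subsets of k components and (k/n) I(X) is the linear baseline at that scale. The sketch below follows that form with two assumptions of ours: the variables are jointly Gaussian, so every entropy follows from a covariance matrix, and the sum enumerates every subset, which is only feasible for small n. The covariance matrices are invented toy instances of cases (1) and (3).

```python
import itertools
import numpy as np

def gaussian_integration(cov):
    """Integration I(X) = sum_i H(x_i) - H(X), in nats, for Gaussian variables with covariance `cov`."""
    cov = np.asarray(cov, dtype=float)
    _, logdet = np.linalg.slogdet(cov)
    # For Gaussians the (2*pi*e) terms cancel, leaving half the log-ratio of the
    # product of the variances to the determinant of the covariance.
    return 0.5 * (np.sum(np.log(np.diag(cov))) - logdet)

def neural_complexity(cov):
    """C_N = sum_k [(k/n) * I(X) - <I(X_j^k)>], averaging integration over all size-k subsets."""
    cov = np.asarray(cov, dtype=float)
    n = cov.shape[0]
    total = gaussian_integration(cov)
    c_n = 0.0
    for k in range(1, n + 1):
        sub = [gaussian_integration(cov[np.ix_(s, s)])
               for s in itertools.combinations(range(n), k)]
        c_n += (k / n) * total - np.mean(sub)  # deviation from the linear baseline at scale k
    return c_n

independent = np.eye(4)                    # case (1): no statistical dependence
modular = np.array([[1.0, 0.9, 0.1, 0.1],  # case (3): two strongly coupled pairs,
                    [0.9, 1.0, 0.1, 0.1],  # weakly coupled to each other
                    [0.1, 0.1, 1.0, 0.9],
                    [0.1, 0.1, 0.9, 1.0]])
print(neural_complexity(independent))  # 0.0
print(neural_complexity(modular))      # positive
```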

Applied to neural dynamics, complexity as defined by Tononi et al. (1994) was found to be associated with specific patterns of neural connectivity, for example those exhibiting attributes of small-world networks (Sporns et al., 2000). Descendants of this complexity measure have addressed the matching of systems to an environment as well as their degeneracy, i.e. their capacity to produce functionally equivalent behaviors in different ways.

Complex networks

Traditionally, complex systems have been analyzed using tools from nonlinear dynamics and statistical information theory. Recently, the analytical framework of complex networks has led to a significant reappraisal of commonalities and differences between complex systems found in different scientific domains (Amaral and Ottino, 2004). A key insight is that network topology, the graph structure of the interactions, places important constraints on the system's dynamics, by directing information flow, creating patterns of coherence between components, and by shaping the emergence of macroscopic system states. Complexity is highly sensitive to changes in network topology (Sporns et al., 2000). Changes in connection patterns or strengths may thus serve as modulators of complexity. The link between network structure and dynamics represents one of the most promising areas of complexity research in the near future.
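One way to see how topology constrains dynamics is a linear Gaussian model, assumed here purely for illustration, in which each unit receives weighted input from the units connected to it plus independent unit-variance noise: X = C X + R. Solving for X gives the covariance Q Q^T with Q = (I - C)^{-1}, from which the integration defined in the previous section follows. The model, the weights and the three toy topologies below are our choices; the sketch only shows that changing the wiring changes the statistical structure the dynamics can express, not how complexity ranks real networks.

```python
import numpy as np

def linear_model_covariance(conn):
    """Covariance of X = C X + R with independent unit-variance Gaussian noise R.

    X = (I - C)^{-1} R, so Cov(X) = Q Q^T with Q = (I - C)^{-1}.
    Assumes I - C is invertible; conn[i, j] is the influence of unit j on unit i.
    """
    conn = np.asarray(conn, dtype=float)
    q = np.linalg.inv(np.eye(conn.shape[0]) - conn)
    return q @ q.T

def integration(cov):
    """I(X) = sum_i H(x_i) - H(X), in nats, for a Gaussian with covariance `cov`."""
    _, logdet = np.linalg.slogdet(cov)
    return 0.5 * (np.sum(np.log(np.diag(cov))) - logdet)

n = 6
empty = np.zeros((n, n))                    # no connections
ring = np.zeros((n, n))
for i in range(n):                          # each unit drives its successor on a ring
    ring[(i + 1) % n, i] = 0.3
full = 0.1 * (np.ones((n, n)) - np.eye(n))  # weak all-to-all coupling

for name, conn in [("empty", empty), ("ring", ring), ("all-to-all", full)]:
    print(name, integration(linear_model_covariance(conn)))
```

Without connections the units are independent and integration is zero; any wiring that correlates them makes it positive, and its value depends on the pattern and strength of the connections.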

Why complexity?

Why does complexity exist in the first place, especially among biological systems? A definitive answer to this question remains elusive. One perspective is based on the evolutionary demands biological systems face. The evolutionary success of biological structures and organisms depends on their ability to capture information about the environment, be it molecular or ecological. Biological complexity may then emerge as a result of evolutionary pressure on the effective encoding of structured relationships which support differential survival.

Another clue may be found in the emerging link between complexity and network structure. Complexity appears very prominently in systems that combine segregated and heterogeneous components with large-scale integration. Such systems become more complex as they integrate more information more efficiently, that is, as they become more capable of accommodating both the existence of specialized components that generate information and the existence of structured interactions that bind these components into a coherent whole. By thus reconciling parts and wholes, complexity may be a necessary manifestation of a fundamental dialectic in nature.

References

- Adami, C. (2002) What is complexity? BioEssays 24, 1085-1094.

- Adami, C., Cerf, N.J. (2000) Physical complexity of symbolic sequences. Physica D 137, 62-69.

- Amaral, L.A.N., Ottino, J.M. (2004) Complex networks. Eur. Phys. J. B 38, 147-162.

- Bennett, C.H. (1988) Logical depth and physical complexity. In: R. Herken (ed.): The Universal Turing Machine. A Half-Century Survey. Pp. 227-257, Oxford: Oxford University Press.

- Bialek, W., Nemenman, I., Tishby, N. (2001) Predictability, complexity, and learning. Neural Computation 13, 2409-2463.

- Carothers, J.M., Oestreich, S.C., Davis, J.H., and Szostak, J.W. (2004) Informational complexity and functional activity of RNA structures. J. Am. Chem. Soc. 126, 5130-5137.

- Chaitin, G.J. (1977) Algorithmic information theory. IBM Journal of Research and Development 21, 350-359.

- Crutchfield, J.P., Young, K. (1989) Inferring statistical complexity. Phys. Rev. Lett. 63, 105-109.

- Crutchfield, J.P., Shalizi, C.R. (1999) Thermodynamic depth of causal states: Objective complexity via minimal representations. Phys. Rev. E 59, 275-283.

- Gell-Mann, M. (1995) What is complexity? Complexity 1, 16-19.

- Grassberger, P. (1986) Toward a quantitative theory of self-generated complexity. Int. J. Theor. Phys. 25, 907-928.

- Huberman, B.A., Hogg, T. (1986) Complexity and adaptation. Physica D 22, 376-384.

- Kolmogorov, A. N. (1965) Three approaches to the quantitative definition of information. Problems of Information Transmission 1, 1-17.

- Lloyd, S., Pagels, H. (1988) Complexity as thermodynamic depth. Annals of Physics 188, 186-213.

- McShea, D.W. (1996) Metazoan complexity and evolution: Is there a trend? Evolution 50, 477-492.

- Simon, H.A. (1981) The Sciences of the Artificial. MIT Press: Cambridge.

- Sporns, O., Tononi, G., Edelman, G.M. (2000) Theoretical neuroanatomy: Relating anatomical and functional connectivity in graphs and cortical connection matrices. Cereb. Cortex 10, 127-141.

- Tononi, G., Sporns, O., Edelman, G.M. (1994) A measure for brain complexity: Relating functional segregation and integration in the nervous system. Proc. Natl. Acad. Sci. U.S.A. 91, 5033-5037.

Internal references

- Marcus Hutter (2007) Algorithmic information theory. Scholarpedia, 2(3):2519.

- Valentino Braitenberg (2007) Brain. Scholarpedia, 2(11):2918.

- Gregoire Nicolis and Catherine Rouvas-Nicolis (2007) Complex systems. Scholarpedia, 2(11):1473.

- James Meiss (2007) Dynamical systems. Scholarpedia, 2(2):1629.

- Tomasz Downarowicz (2007) Entropy. Scholarpedia, 2(11):3901.

- Arkady Pikovsky and Michael Rosenblum (2007) Synchronization. Scholarpedia, 2(12):1459.